ChatGPT-4o vs. ChatGPT-4: 5 biggest upgrades you need to know

What does the latest version bring to the table?

This week, OpenAI launched the latest version of its game-changing ChatGPT AI chatbot.

It’s not ChatGPT-5, but the ‘o’ at the end of the name, which stands for ‘Omni’, matters. It signals that ChatGPT-4o is more comfortable with voice, text and vision interactions than any previous version.

Here are the five most significant upgrades over its predecessor.

ChatGPT-4o is free for all

This is undoubtedly the main upgrade for casual users. Previously, the smarter GPT-4 was only accessible to those willing to fork out $20 per month for a Plus subscription. Now, thanks to improvements in its efficiency, OpenAI says that GPT-4o is free to every user.

That doesn’t mean there’s no longer a reason to pay, however. Paid users get up to five times as many prompts (once you run out, conversations revert to the more limited GPT-3.5), and the big voice mode improvements will initially be off-limits to free accounts. Those voice and vision features haven’t arrived yet, but based on the demo, they’ll be a game-changer.

Big improvements to voice mode queries

GPT-4 has a voice mode, but it’s pretty limited. It can only respond to one prompt at a time, making it like a souped-up Alexa, Google Assistant or Siri. That has changed massively with GPT-4o, as OpenAI’s demo video shows.

It’s worth watching in full, but in summary, ChatGPT is not only capable of coming up with a “bedtime story about robots and love” in jaw-dropping real time, but it can also respond to interruptions asking for amendments on the fly. To the delight of the audience, GPT-4o is able to up the drama of its voice, switch to robotic tones and even cut to the chase and end the tale with a song.


Crucially, it responded to all these alterations without forgetting the main thread of the conversation — something that the best smart speakers just can’t handle right now.

Improved vision capabilities

The impressive voice mode presentation led into an even more impressive demo of the vision capabilities. Using a phone camera, GPT-4o helped solve a written linear equation in real time and, as it had been asked to, did so without simply giving away the answer.

At the end of the demo, the AI is flattered when “I ❤️ ChatGPT” is written down for it to ‘see’.

It’s not hard to figure out how this could be used in the real world: to explain some code or to summarize a foreign text in English. But it’s not just text; a second demo correctly detected happiness and excitement on the face in a freshly taken selfie.

At the moment, the improved vision capabilities seem to be aimed at static images. Still, OpenAI believes GPT-4o will soon be able to work with video, such as watching a sporting event and explaining the rules.

A whole lot faster

Although astonishingly quick, ChatGPT-4 certainly lets you see the cogs turn, especially on more complex queries. ChatGPT-4o is “much faster,” according to OpenAI, and the difference is noticeable in use.

If you want some actual timings on that, XDA Developers has delivered a couple of benchmarks.

A 488-word answer appeared in under 12 seconds in GPT-4o, while a similar response “would require nearly a minute of generation under GPT-4 at times”. It was also capable of generating a CSV in under a minute, while “GPT-4 took nearly as long to just generate the cities being used in the example.”

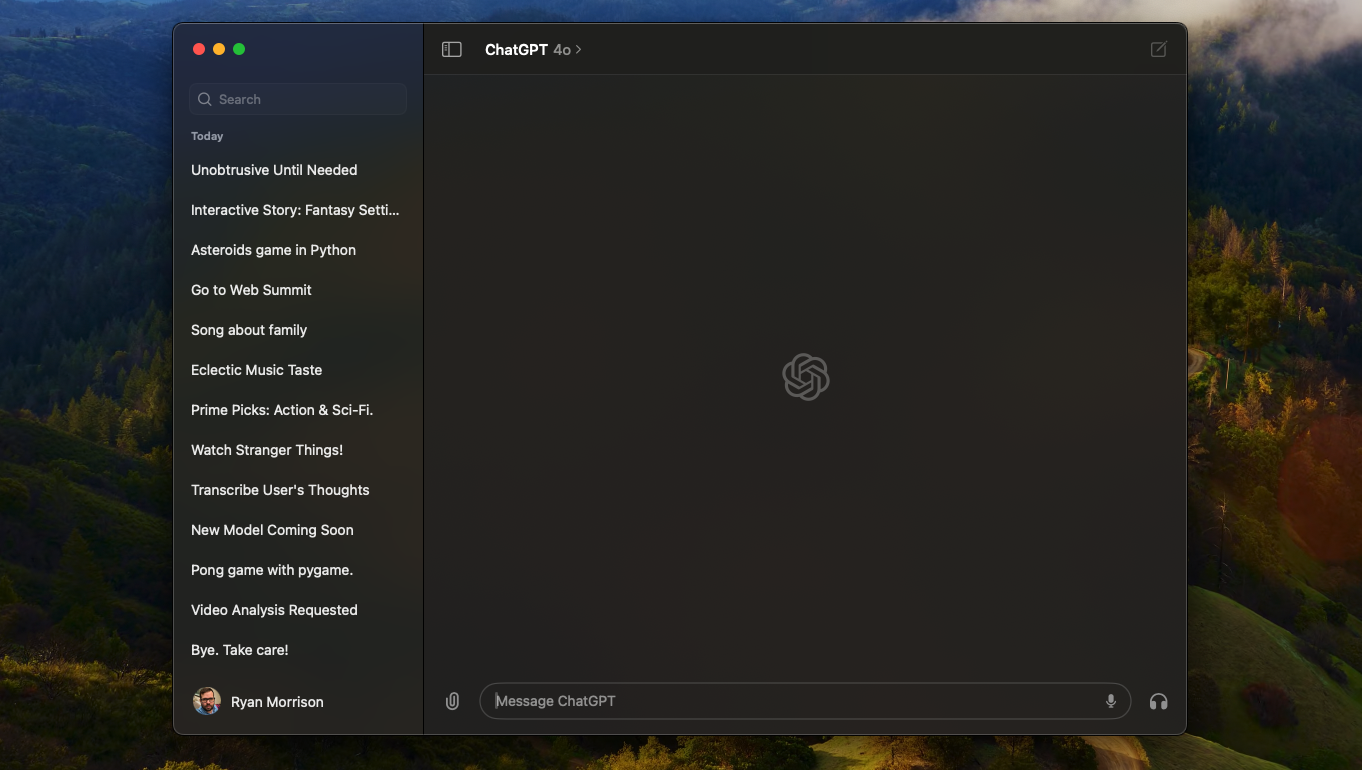

Native apps for Mac and (in time) Windows

The web version may well be enough for most people, but there’s good news for those who crave a desktop app.

OpenAI has released a dedicated Mac app that’s currently available in early access to Plus subscribers. It’s a staggered rollout, however, so you’ll have to wait for an email from OpenAI with a download link. Even if you do find a legitimate .dmg file elsewhere, you won’t be able to use it until your account has been green-lit.

What about Windows? Well, OpenAI says that a Windows app should be ready by the end of 2024. Perhaps the delay is because Microsoft is still pushing Windows 11 users towards using the ChatGPT-powered Copilot.


Freelance contributor Alan has been writing about tech for over a decade, covering phones, drones and everything in between. Previously Deputy Editor of tech site Alphr, his words are found all over the web and in the occasional magazine too. When not weighing up the pros and cons of the latest smartwatch, you'll probably find him tackling his ever-growing games backlog. He also handles all the Wordle coverage on Tom's Guide and has been playing the addictive NYT game for the last several years in an effort to keep his streak forever intact.