Apple's new Depth Pro AI could revolutionise AR — capturing 3D space from a single image in just seconds

AI's going in-depth

Not a week goes by without something new pushing AI development forward, and this week's advance comes from a small tech company in Cupertino.

While all eyes are on Apple Intelligence and its eventual release, which will bring context-specific AI features to everyday use, the company has also shown off a new AI model called Depth Pro.

As the name suggests, this new artificial intelligence model will map the depth of an image in real time. More exciting still, it can do this on standard home computing hardware, with no Nvidia H100s required.

Depth Pro is a research model, not something Apple is necessarily putting into production. But if we ever get a pair of Apple Glasses, it would certainly help the company make augmented reality work better, and it could even improve the AR functionality of the Vision Pro.

Apple released an incredible ML Depth Model yesterday that creates a depth map in *meters* from a single image

I built a demo to play with it

- Added ability to download depth map in meters

- AND can generate a real-scale 3D object file of the scene

(forked from a space by… pic.twitter.com/XdbtqN9Dp4

October 6, 2024

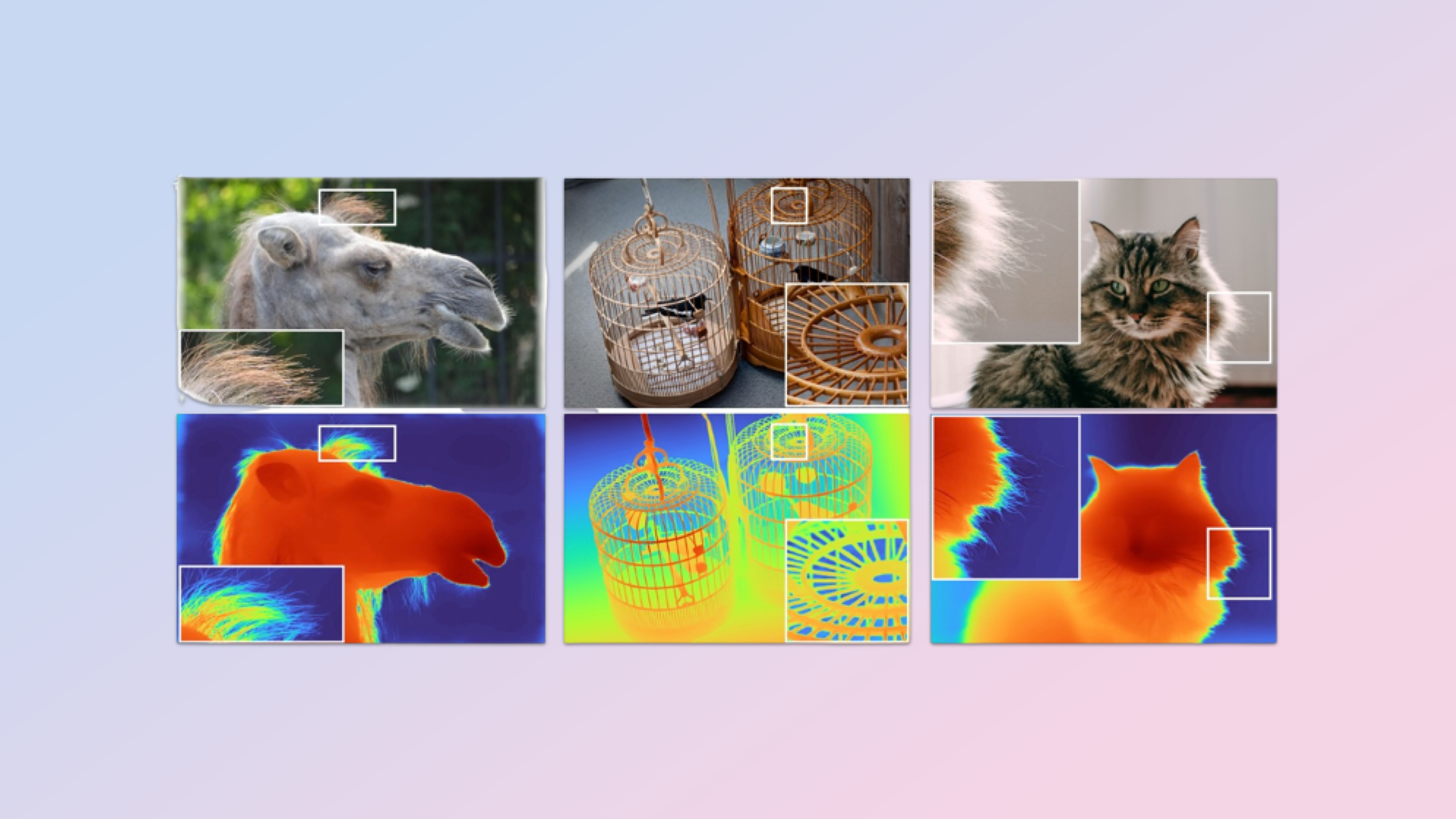

Apple's new model estimates relative and absolute depth, using them to produce "metric depth". This data can then be used, along with the image, in a range of ways.

When a user takes a picture, Depth Pro draws accurate measurements between items in the image. Apple's model should also avoid inconsistencies like thinking the sky is part of the background, or misjudging the foreground and background of a shot.
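To give a rough sense of how a depth map in meters turns into real-world measurements, here is a minimal sketch using the standard pinhole camera model. It is not Apple's code and does not call Depth Pro: the function names `unproject` and `distance_between_pixels`, the tiny synthetic depth map, and the assumption that the optical center sits in the middle of the image are all illustrative.

```python
import math


def unproject(u, v, depth_m, f_px, cx, cy):
    """Back-project pixel (u, v) with metric depth into camera space, in meters.

    Uses the pinhole camera model: f_px is the focal length in pixels and
    (cx, cy) is the principal point (hypothetical values in this sketch).
    """
    x = (u - cx) * depth_m / f_px
    y = (v - cy) * depth_m / f_px
    return (x, y, depth_m)


def distance_between_pixels(depth_map, p, q, f_px):
    """Straight-line distance in meters between the 3D points behind pixels p and q.

    depth_map is a list of rows holding metric depth values; p and q are
    (column, row) pixel coordinates.
    """
    height, width = len(depth_map), len(depth_map[0])
    # Assumption: the principal point is the exact center of the image.
    cx, cy = (width - 1) / 2, (height - 1) / 2
    a = unproject(p[0], p[1], depth_map[p[1]][p[0]], f_px, cx, cy)
    b = unproject(q[0], q[1], depth_map[q[1]][q[0]], f_px, cx, cy)
    return math.dist(a, b)


# Synthetic stand-in for a model's output: a flat wall 2 m from the camera,
# seen in a 5x5 image with a made-up focal length of 4 pixels.
depth_map = [[2.0] * 5 for _ in range(5)]
wall_width_m = distance_between_pixels(depth_map, (0, 2), (4, 2), f_px=4.0)
print(f"Distance between the two points: {wall_width_m:.2f} m")
```

In a real pipeline, the depth values and focal length would come from the model or the camera metadata rather than being typed in by hand, and the same back-projection applied to every pixel is one way a 3D file of a scene could be built.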

How could Apple's new Depth Pro model be used?

The potential, Terminator 2 aside, is almost endless. Autonomous cars (ironically like Apple's canceled offering), drones, and robot vacuums could use accurate depth sensing to improve object avoidance, while augmented reality tech and online furniture stores could use it to place items more accurately around a room, real or virtual.


Medical tech could benefit from better depth perception, too, with more accurate reconstruction of anatomical structures and mapping of internal organs.

It could go full circle, too, helping generative AI tools like Luma Dream Machine turn images into video more accurately. This would work by passing the depth data to the video model along with the image, giving it a better understanding of how to handle object placement and motion in that space.


Lloyd Coombes is a freelance tech and fitness writer. He's an expert in all things Apple as well as in computer and gaming tech, with previous works published on TechRadar, Tom's Guide, Live Science and more. You'll find him regularly testing the latest MacBook or iPhone, but he spends most of his time writing about video games as Gaming Editor for the Daily Star. He also covers board games and virtual reality, just to round out the nerdy pursuits.