Apple takes on Meta with new open-source AI model — here's why it matters

Everything is open, including training data

Apple is fast becoming one of the surprise leaders in the open-source artificial intelligence movement, offering a new 7B-parameter model that anyone can use or adapt.

Built by Apple's research division, the new model is unlikely to ever be part of an Apple product, beyond lessons learned during training. However, it is part of the iPhone maker's commitment to building out the wider AI ecosystem, including through open data initiatives.

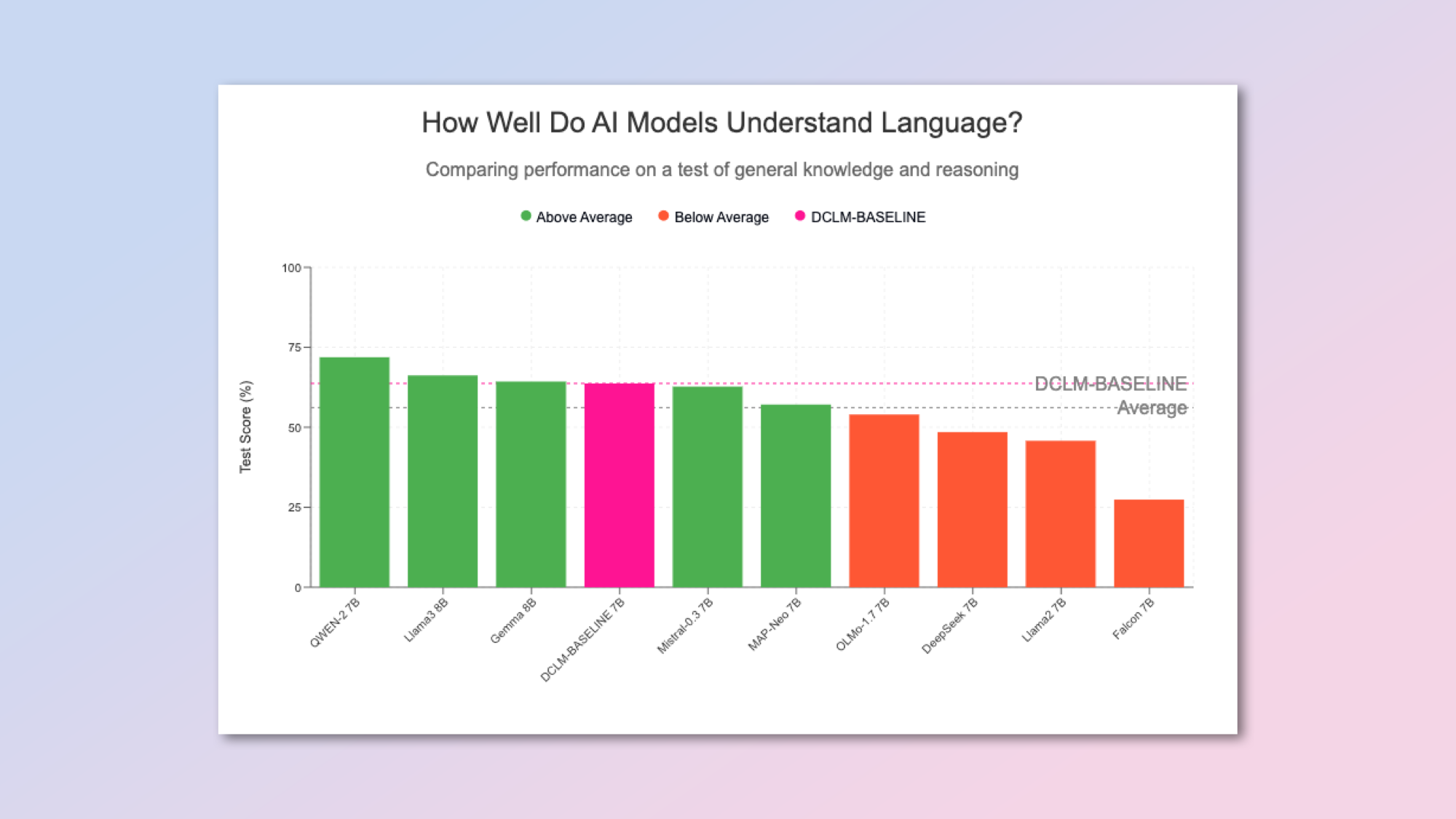

This is the latest release in the DCLM model family and has outperformed Mistral-7B in benchmarks, getting close to similar-sized models from Meta and Google.

Vaishaal Shankar from Apple's ML team wrote on X that these are the "best performing truly open-source models" available today. By "truly open-source" he means that all the weights, training code, and datasets are publicly available alongside the model.

This comes in the same week Meta is expected to unveil its massive GPT-4 competitor Llama 3 400B. It is unclear whether Apple is planning a larger DCLM model release in the future.

What do we know about Apple’s new model?

"We have released our DCLM models on huggingface! To our knowledge these are by far the best performing truly open-source models (open data, open weight models, open training code)." Posted on X, July 18, 2024.

Apple's DCLM (DataComp for Language Models) project involves researchers from Apple, the University of Washington, Tel Aviv University and the Toyota Research Institute. The aim is to design high-quality datasets for training models.

Given recent concerns over the data used to train some models, and whether all of the content in a dataset was properly licensed or approved for training AI, this is an important effort.

The team runs different experiments across the same model architecture, training code, evaluations, and framework to find out which data strategy works best to create a model that both performs well and is very efficient.

This work resulted in DCLM-Baseline, the dataset used to train the new models in 7 billion and 1.4 billion parameter versions.

What makes the new models different?

This model is very efficient as well as fully open source. The 7B model performs as well as other models of the same size but was trained on far fewer tokens of content.

It does have a fairly small 2,000-token context window, so it won't be suitable for summarizing long texts, but it scores 63.7% 5-shot accuracy on the MMLU benchmark.
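To get a feel for what a 2,000-token window means in practice, here is a minimal Python sketch that estimates whether a piece of text would fit and splits it into window-sized chunks if not. The four-characters-per-token ratio and the 200-token reply reserve are assumptions for illustration, not figures from Apple, and the function names are hypothetical. A real tokenizer would give different counts.

```python
import math

CONTEXT_WINDOW_TOKENS = 2000  # context window size quoted in the article
CHARS_PER_TOKEN = 4           # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; a real tokenizer will differ."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 200) -> bool:
    """True if the estimated prompt still leaves room for the model's reply."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


def split_into_chunks(text: str, reserved_for_output: int = 200) -> list[str]:
    """Cut text into pieces that each fit the estimated window budget."""
    budget_chars = (CONTEXT_WINDOW_TOKENS - reserved_for_output) * CHARS_PER_TOKEN
    return [text[i:i + budget_chars] for i in range(0, len(text), budget_chars)]
```

Under these assumptions, roughly 7,200 characters of English text is the most that fits in a single prompt, which is why long reports would need to be summarized piece by piece.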

Despite its small size and small context window, the fact that all weights, training data and processes have been open-sourced makes this one of the most important AI releases of the year.

It will make it easier for researchers and even companies to create their own small AIs that could be embedded in research programs or apps and used without per-token costs.

OpenAI CEO Sam Altman said of last week's release of the smaller GPT-4o mini that the goal is to create intelligence too cheap to meter. Apple's project is part of that same ideal.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?