Apple just unveiled new Ferret-UI LLM — this AI can read your iPhone screen

Is this part of Siri 2.0?

Apple researchers have created an AI model that can understand what's happening on your phone screen. It is the latest in Apple's growing line-up of AI models.

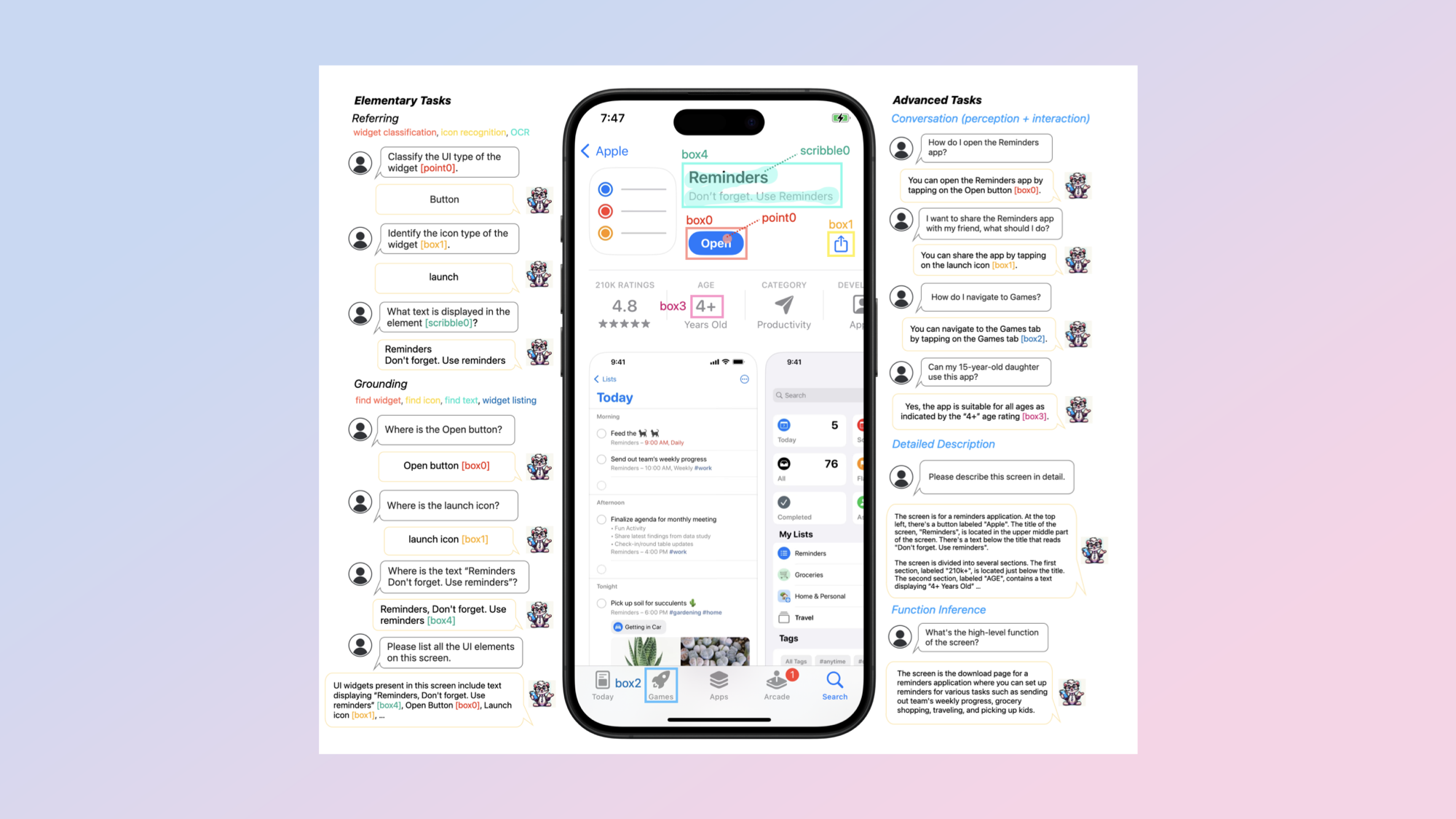

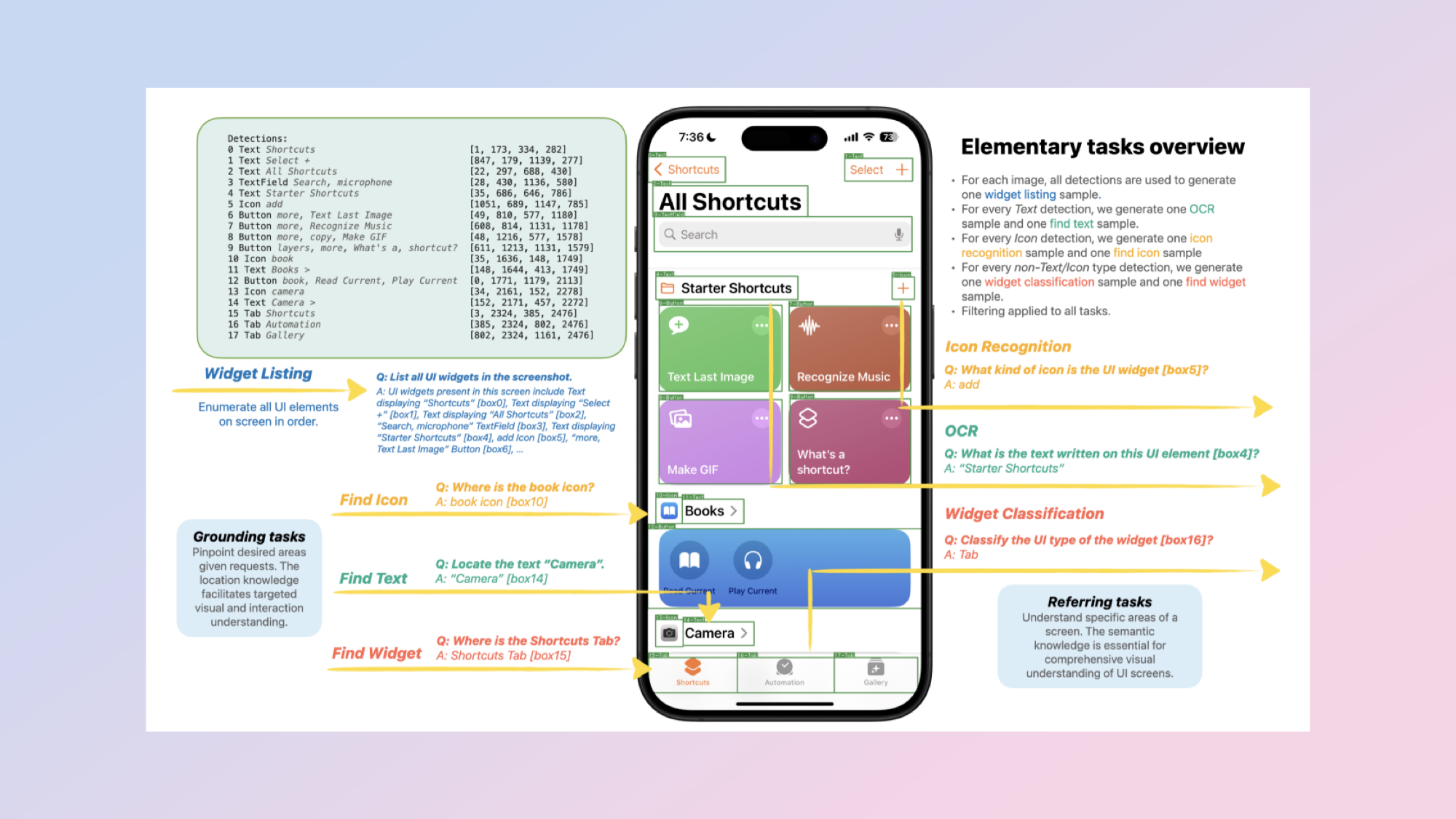

Called Ferret-UI, this multimodal large language model (MLLM) can perform a wide variety of tasks based on what it sees on your phone's screen. For instance, Apple's new model can identify icon types, find specific pieces of text, and give you precise instructions for accomplishing a specific task.

These capabilities are documented in a recently published paper that details how this specialized MLLM was designed to understand and interact with mobile user interface (UI) screens.

What we don't know yet is whether this will form part of the rumored Siri 2.0, or is just another Apple AI research project that goes no further than a published paper.

How Ferret-UI works

We currently use our phones to accomplish a variety of tasks: we might want to look up information or make a reservation. To do this, we look at our phones and tap whichever buttons lead us to our goal.

Apple believes that if this process can be automated, our interactions with our phones will become even easier. It also anticipates that models such as Ferret-UI can help with accessibility, app testing, and usability testing.

For such a model to be useful, Apple had to ensure that it could understand everything happening on a phone screen while also being able to focus on specific UI elements. It also needed to match instructions given in natural language with what it sees on the screen.

For example, Ferret-UI was shown a picture of AirPods in the Apple Store and asked how one would purchase them. It correctly replied that one should tap the 'Buy' button.

Why Ferret-UI is important

With most of us having a smartphone in our pocket, it makes sense that companies are looking into how they can add AI capabilities tailored to these smaller devices.

Research scientists at Meta Reality Labs already anticipate that we’ll be spending more than an hour every day either in direct conversations with chatbots or having LLM processes run in the background powering features such as recommendations.

Meta's chief AI scientist Yann LeCun goes as far as saying that AI assistants will mediate our entire digital diet in the future.

So while Apple didn't spell out exactly what its plans for Ferret-UI are, it's not hard to imagine such a model being used to supercharge Siri and make the iPhone experience a breeze, possibly even before the year is over.

Christoph Schwaiger is a journalist who mainly covers technology, science, and current affairs. His stories have appeared in Tom's Guide, New Scientist, Live Science, and other established publications. Always up for joining a good discussion, Christoph enjoys speaking at events or to other journalists and has appeared on LBC and Times Radio among other outlets. He believes in giving back to the community and has served on different consultative councils. He was also a National President for Junior Chamber International (JCI), a global organization founded in the USA. You can follow him on Twitter @cschwaigermt.