I've been testing Apple Intelligence — 5 features I love and 4 I don't

After testing Apple Intelligence, I've seen some hits and misses with Apple's AI

Apple Intelligence hasn't officially landed on Apple devices just yet — it's coming to iPhones, iPads and Macs sometime this month. But you'll excuse me for feeling like Apple's initial suite of AI-powered tools is already here.

That's because I've been spending a lot of time with Apple Intelligence as of late, exclusively on iPhones running the iOS 18.1 beta, so that I can familiarize myself with the first batch of AI tools that will soon be available. And I've definitely formed some strong impressions about which features I'm looking forward to including in my daily workflow and which ones still need some tinkering in Cupertino.

First previewed this summer at Apple's WWDC 2024 event, Apple Intelligence marks Apple's first big push into artificial intelligence, an area where competitors like Samsung and Google already enjoy a substantial lead. Not every promised Apple Intelligence feature is debuting this month. Still, the updates to iOS 18, iPadOS 18 and macOS Sequoia will bring new Writing Tools features, a Clean Up tool in Photos, better search in Photos, the ability to create slideshows from your photo library with a text prompt, changes to the Mail app and the first of what promises to be a series of updates that make the Siri personal assistant more contextually aware.

Which of these new features are hits and which are misses? That's something everyone's going to decide for themselves. But in my Apple Intelligence testing, I've spotted several standout additions as well as a few features that don't quite hit the mark.

Apple Intelligence features I love

Phone call recording and summaries

The ability to record calls on your iPhone isn't solely an Apple Intelligence feature — as of iOS 18.1 beta 4, Apple extended the capability to devices beyond the iPhone 15 Pro and iPhone 16 models that support Apple Intelligence. (For instance, I've been able to record phone calls and produce transcripts on my iPhone 12 just fine with the latest iOS 18.1 beta.) The only part of this feature limited to Apple Intelligence-capable devices is the ability to produce summaries of your recorded phone calls.

Regardless of which element you're using — the call recording in the Phone app, the transcripts that appear in the Notes app or the Apple Intelligence-exclusive summarize tool — this is one of the best-implemented features in the iOS 18.1 update.

Starting a recording on an iPhone call is a lot more streamlined than it is with the similar Call Notes feature on Google's latest Pixel flagships. (That said, I wish Apple would make the record button more visible, as it can blend into the background of lightly colored Contact Posters.) The transcripts, while not 100% accurate, do clearly identify who's speaking, even adding names if you're recording a call with someone on your contact list. And the summaries I've gotten accurately reflect what's been discussed in the call, making it easier to skim transcripts for the info you're looking for.

Anyone who's upgraded to iOS 18.1 should appreciate the addition of phone call recording, and people with phones that support Apple Intelligence will like the added bonus of the summary feature.

Natural language searches in Photos

Let's be frank: the search functionality in Photos has stunk up the joint for years. You're essentially reduced to searching for specific keywords, and even then, results only sporadically turn up what you want. Often, I find it easier just to scroll through my library of thousands of photos than to trust the vagaries of search.

That was before Apple added support for natural language searches with Apple Intelligence. Now, when I want to find photos of my daughter at a concert, I can type in that exact phrase and get what I'm looking for. Even better, while this is an Apple Intelligence feature, I noticed in my testing that my iPhone 12 running iOS 18.1 produced improved search results that often matched what I found with the same queries on an iPhone 15 Pro. In other words, the benefits of natural language search seem to have trickled down to other phones running the iOS 18.1 update.

Professional tone in Writing Tools

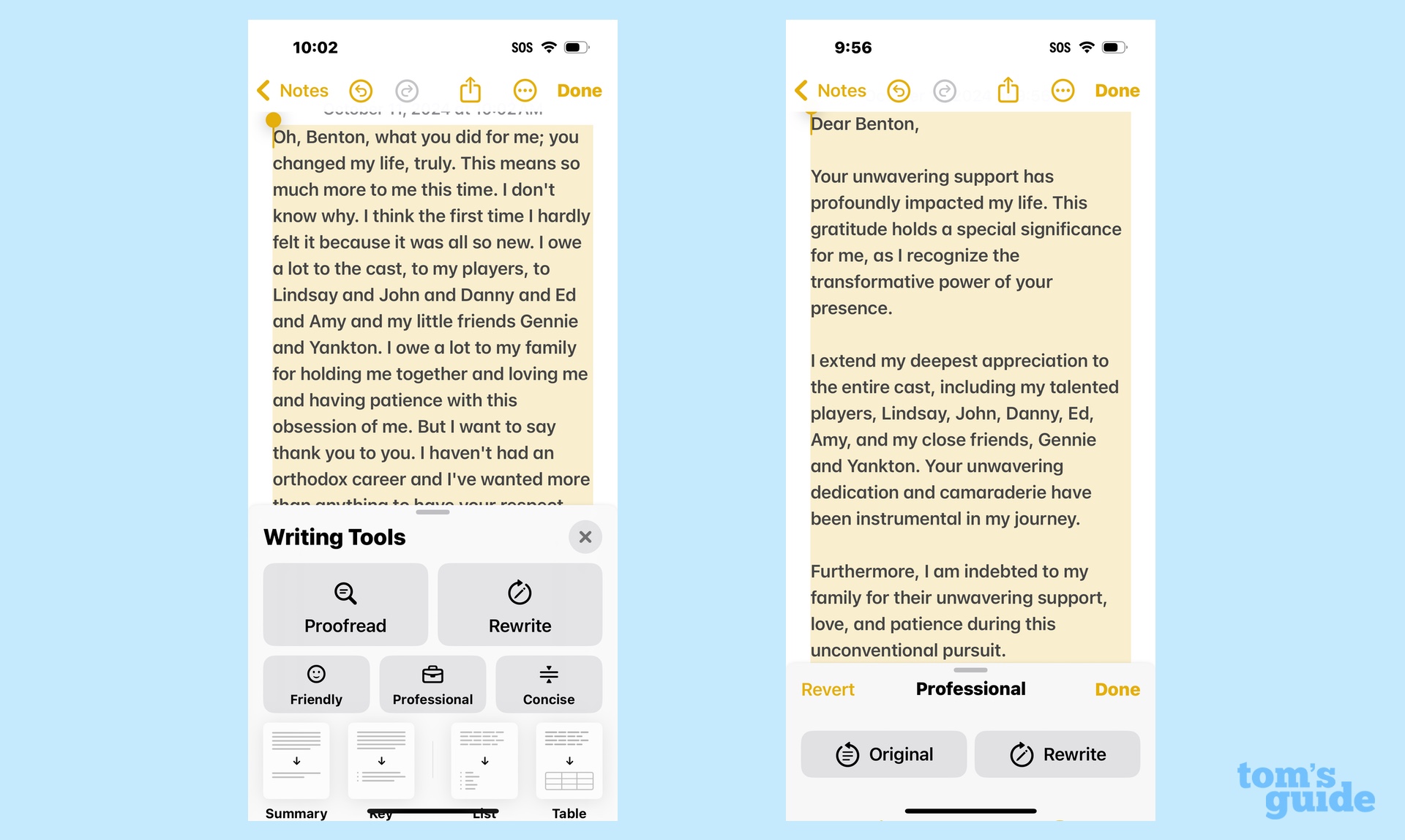

Not to get ahead of ourselves, but I'm pretty dubious about the value of the Writing Tools features introduced in Apple Intelligence. Writing Tools covers a wide swath of capabilities, some of which are valuable (a proofreading feature, for example) and some of which are less so. (We'll get to the one that bugs me later.)

But Writing Tools also includes buttons that change the tone of what you've written. And it turns out the one that gives your writing a Professional tone is shockingly good.

Maybe that shouldn't be a surprise. Formal writing for things like cover letters and business correspondence is really about following certain rules, and those rules are easy to train AI to obey. Either way, I subjected a sloppily constructed cover letter to the Professional tone in Writing Tools, and the feature whipped the letter into shape, producing something I wouldn't be averse to sending to a real employer. Similarly, the Professional tone managed to condense a fairly rambling list of thanks into a coherent thank-you note.

Not every Writing Tools capability is worth relying on, at least not without some intervention on your part. But the Professional tone is really polished at this point, and it can make your correspondence sound more businesslike if that's not a skill set you've developed over the years.

Priority Mail

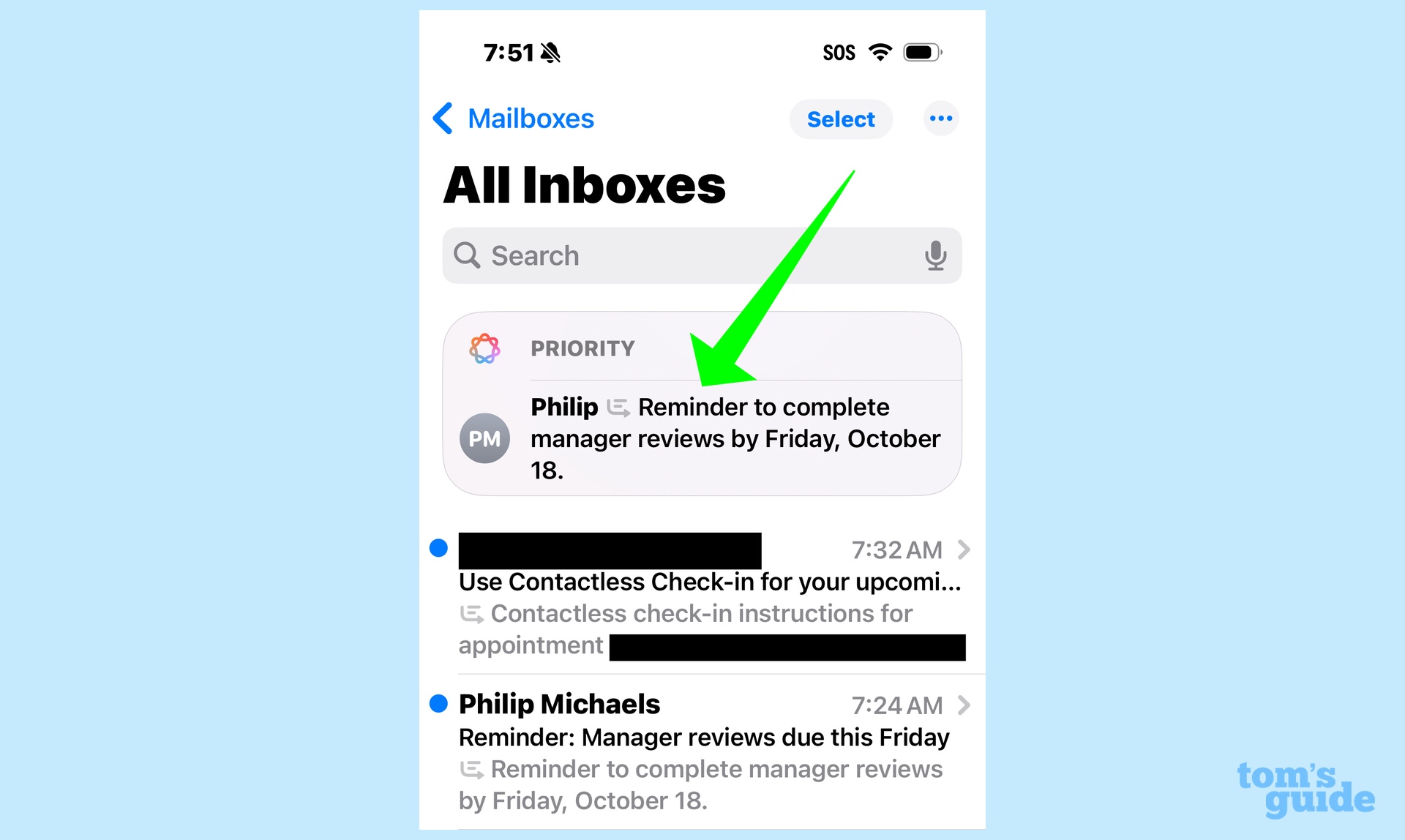

Bigger changes are coming to the Mail app later this year, when software updates will split up your inbox into different sections for personal mail, receipts, offers and newsletters. But the first batch of Apple Intelligence features includes Priority Mail, and it's a welcome addition that should help keep you on top of things.

When you get a message with a call to action or deadline, Priority Mail is designed to float that email to the top of your inbox, making it less likely that you'll miss that important message. Not only that, but there are visual cues too — key emails will have a Priority label at the top and they'll be set apart in a box that's separate from the rest of your inbox. Good luck saying that letter escaped your attention.

Mail summaries

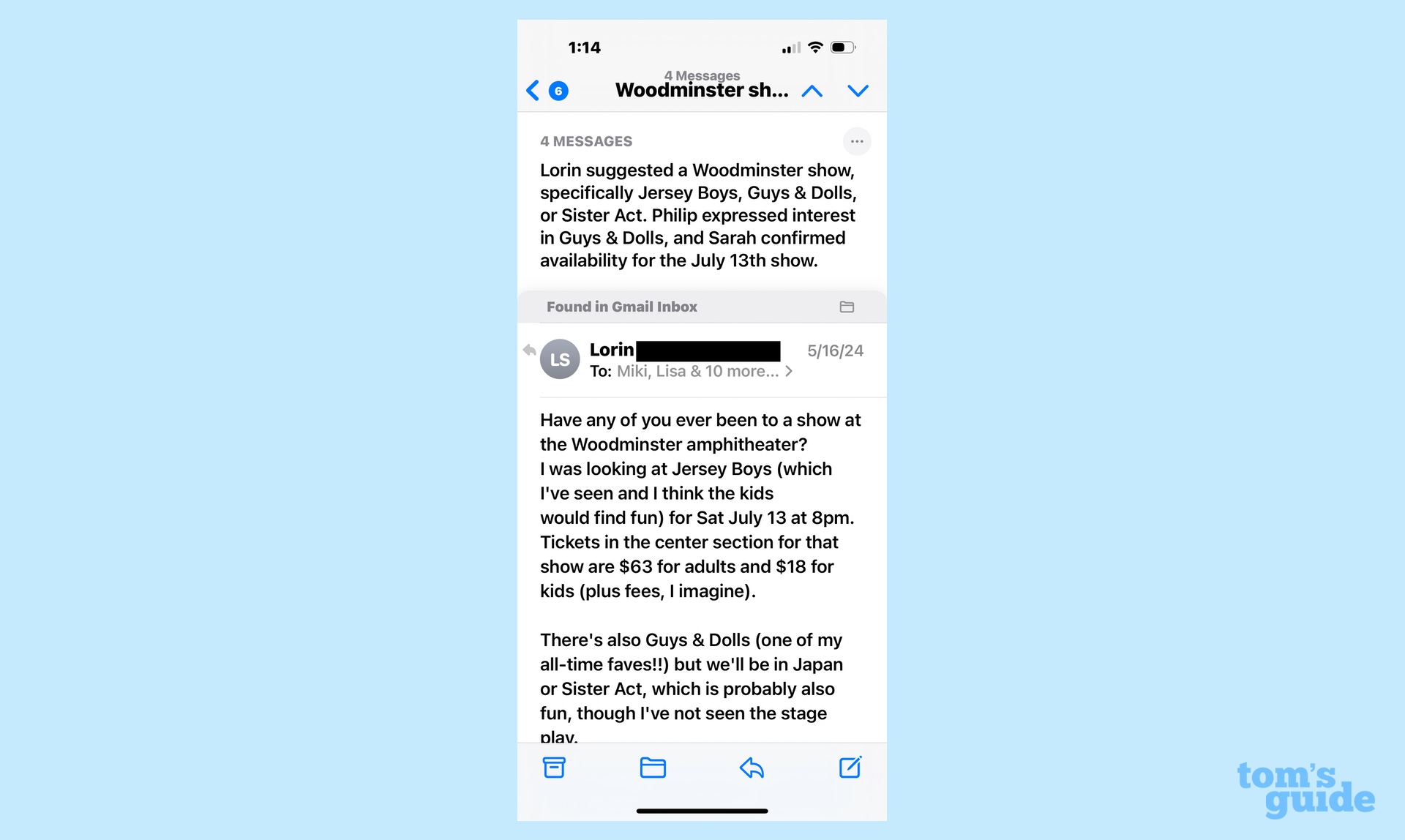

While we're on the subject of positive changes to the Mail app, let's talk about the summary tool powered by Apple Intelligence. At the top of each email, there's a button you can tap that will produce a summary of the message's contents.

Obviously, that's not a tool you're going to need for every letter. But on long email chains with a lot of back and forth, the AI-generated summaries are a very effective way of boiling down messages to their essential takeaways. A summary of a 12-message conversation featuring multiple people weighing in can help you find the exact info you're looking for without having to skim each individual reply.

Apple Intelligence features that need more work

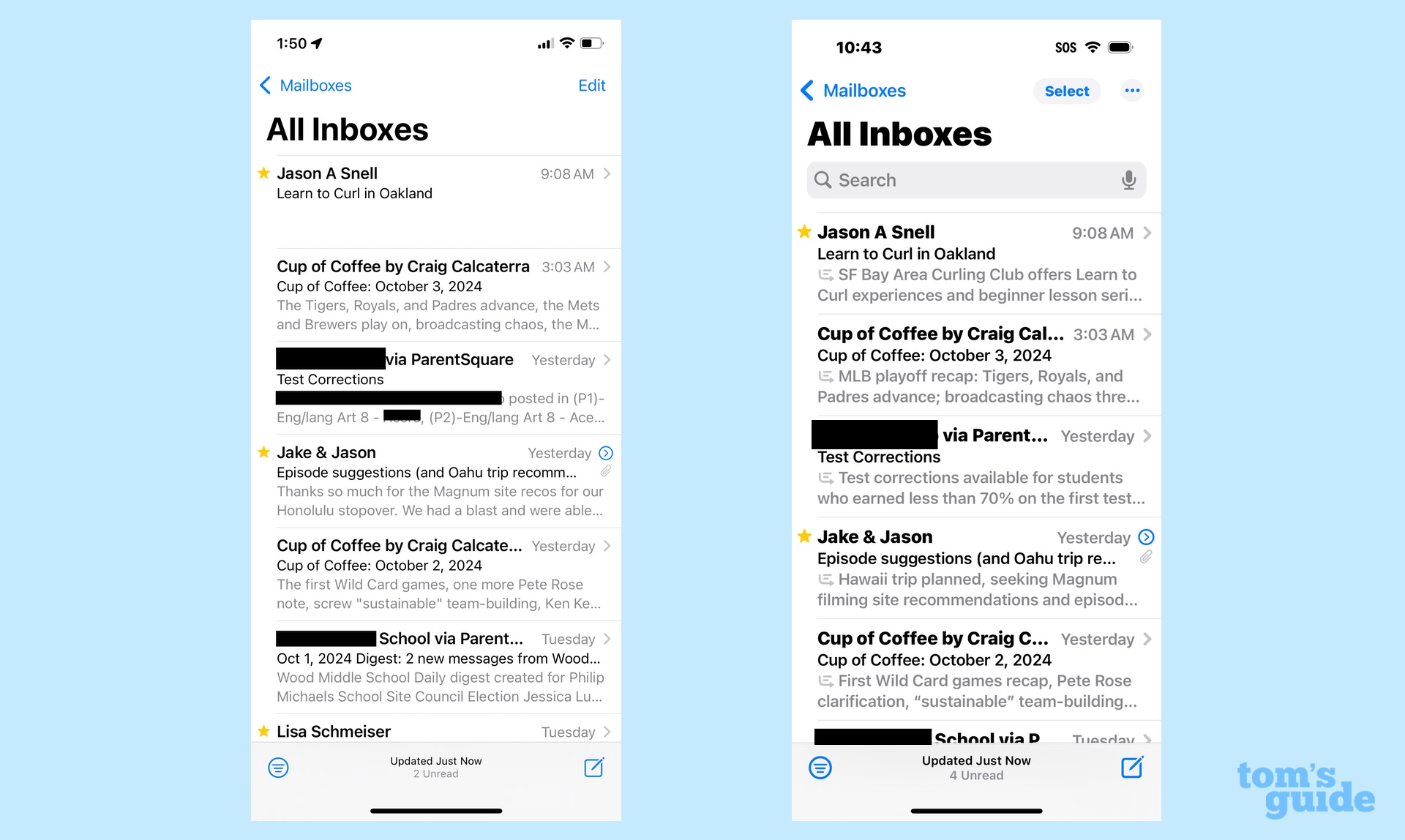

Summaries in Mail's inbox

We've thrown some roses in Mail's direction, so now let's lob a lone brick at an Apple Intelligence-fueled change. In the Mail inbox, each message preview used to show the first few lines of the email, which was sometimes helpful in figuring out what it was about but more often didn't tell you much. To fix that, Apple Intelligence now whips up a summary of the email instead, so that you can skim it in the inbox before tapping on a message to read it.

It's a good idea in theory, but it's not well implemented in practice. Apple has limited the summaries to two lines, which is rarely enough space to fit in the most important parts. What ends up happening is that the summary gets cut off mid-sentence, which defeats the purpose of the change. If Mail is going to summarize the contents of a message in the inbox (and it should), Apple should set aside space for the full summary.

Type to Siri

In another example of a change where I like the idea but don't care for the implementation, let's look at Type to Siri. This feature is great for those times when you need to interact with the digital assistant, but you don't want to bark out commands. Maybe you're in a meeting or out in public or you just need to look up something that you'd rather not broadcast to the wider world. You can activate the Type to Siri feature, and an on-screen keyboard appears to tap out your query.

You launch Type to Siri by double-tapping the bottom of your iPhone screen. And I mean the very bottom, pretty much as close to the bezel as you can get. That's not a lot of real estate to work with, and there's roughly a 50-50 chance that my taps meant to bring up the keyboard won't do anything at all, or worse, that I'll end up interacting with some other on-screen element.

I wish there were some corner of the screen I could swipe up from to launch Type to Siri, similar to how I can open Control Center or Notification Center with a swipe. As it stands, the current method is a little frustrating, and I'm not sure how much better it will get with more practice.

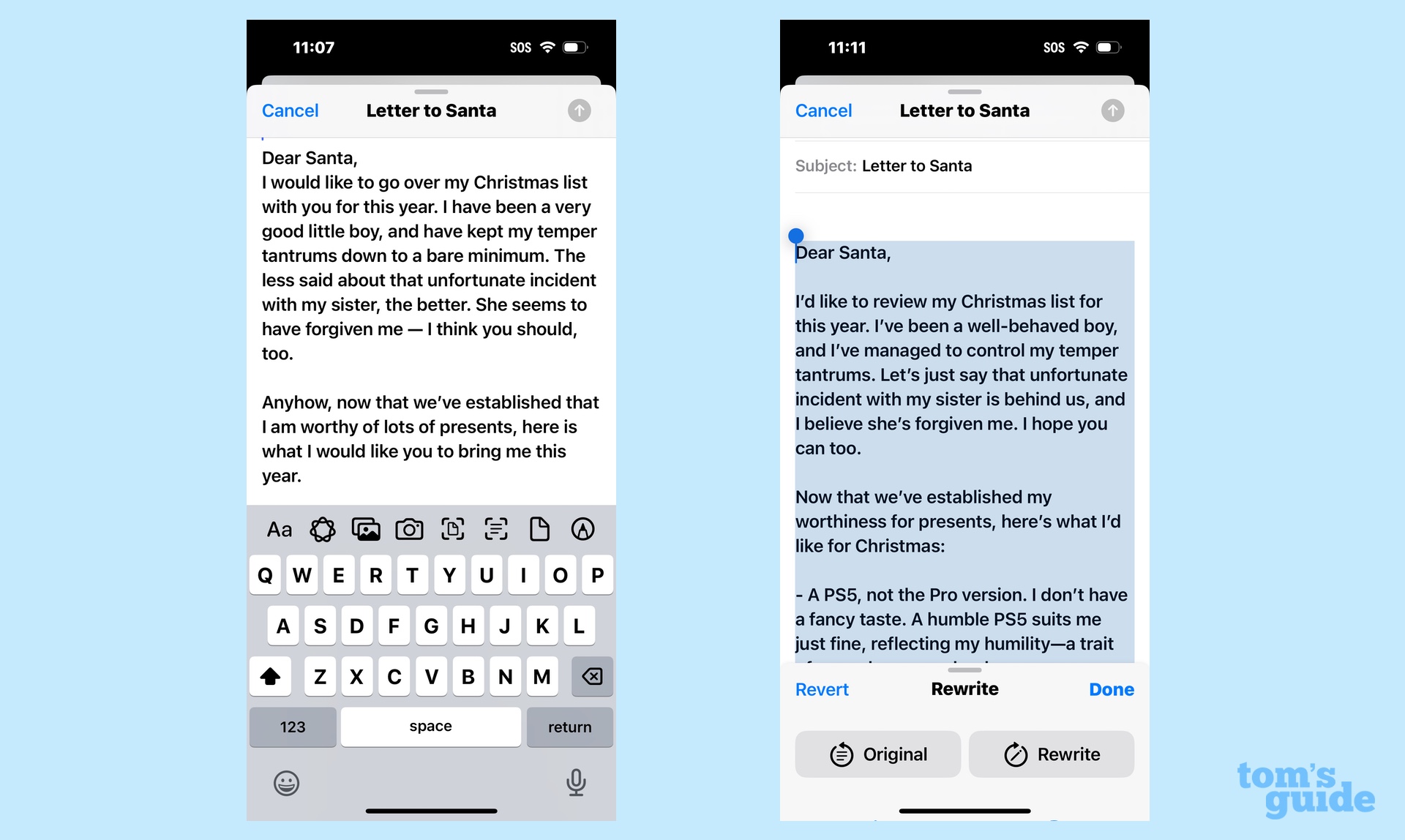

The Rewrite tool in Writing Tools

As noted above, I think the Professional button in Writing Tools does a great job sprucing up formal messages, and the Friendly tone may come in handy for making some emails come off as less aggressive. The Concise tool is promising, though you need to be careful that Apple's AI isn't changing your meaning in the service of shortening your text.

The Rewrite tool? Well, that's just absolutely useless in my experience.

Chalk this up to the ego of someone who writes professionally if you want, but after trying out the Rewrite tool on multiple occasions, I just don't see what it brings to the table. For the most part, Rewrite seems to swap in the occasional synonym for one of the words you chose, whether the change improves things or not. At its worst, Rewrite sands off any personal writing style that may have been in your original text, producing something that reads like it was composed by a robot.

Rewrite is the very worst example of an AI-powered feature, and it will make your messages, notes and texts worse. Apple should just eighty-six it from Apple Intelligence at the earliest possible moment.

The updated Siri

With the understanding that the iOS 18.1 version of Siri that I'm using is just the first step in a revamp process that figures to last into 2025, I've been underwhelmed by the changes made to Apple's digital assistant thus far. There are things I like — when speaking to Siri, you can correct yourself without confusing the assistant, and as a person who occasionally gets tongue-tied and has a slight stammer, I appreciate that greater tolerance for the vagaries of human speech.

The updated Siri understands context, too, and I've found that to generally be an improvement over the old version of the assistant. I can ask Siri for the weather in Maui and get the current conditions before following up with a question like "And what will it be like next week?" Siri's smart enough to know I want a forecast for Maui for the coming days.

But that understanding can be limited. I asked Siri for the score of last night's Dodgers-Mets game and got a very handy box score. But the follow-up question of "who's pitching today?" produced a bunch of Safari links, not any information about the upcoming game. A second follow-up of "when does the game start?" had Siri asking me what sport I was talking about. If this is the smarter digital assistant, I'd hate to interact with the dumb one.

I expect Siri's understanding of context will get better over time. But I'd caution against expecting a world of difference in your Siri interactions when you install iOS 18.1 on your iPhone this month.

Philip Michaels is a Managing Editor at Tom's Guide. He's been covering personal technology since 1999 and was in the building when Steve Jobs showed off the iPhone for the first time. He's been evaluating smartphones since that first iPhone debuted in 2007, and he's been following phone carriers and smartphone plans since 2015. He has strong opinions about Apple, the Oakland Athletics, old movies and proper butchery techniques. Follow him at @PhilipMichaels.