iOS 18.4 adds a crucial Apple Intelligence feature to the iPhone 15 Pro — and it makes your phone more powerful

Here's how to set up Visual Intelligence on an iPhone 15 Pro — and what you can do with it

I own an iPhone 15 Pro, which means I've been able to use most Apple Intelligence tools since Apple launched its suite of AI features with the iOS 18.1 release last fall. Most tools, but not all.

When Apple launched the iPhone 16 lineup last year, it also announced a new feature called Visual Intelligence. With the Camera Control button on those iPhone 16 models, you could summon Apple's answer to Google Lens and get more information and even a handful of actionable commands based on whatever it was you were pointing your phone's camera at.

Though the iPhone 15 Pro and iPhone 15 Pro Max have enough RAM and processing power to run Apple Intelligence features, Visual Intelligence has not been one of them. That means someone's $799 iPhone 16 could do something the phone you paid at least $999 for couldn't. And when the $599 iPhone 16e debuted last month, we learned that it, too, could access Visual Intelligence while iPhone 15 Pro and Pro Max owners remained shut out. Why, the very idea!

That's changed, though, with the arrival of the second public beta of iOS 18.4. If you're trying out that beta on an iPhone 15 Pro, you've now gained the ability to run Visual Intelligence. And while that's not necessarily a game-changing decision, it does give your older iPhone new powers it didn't have previously. And some of those powers are proving to be quite useful.

Here's a quick rundown of how iPhone 15 Pro and iPhone 15 Pro Max users can set up their devices to take advantage of Visual Intelligence, along with a reminder of just what you can use that AI-powered feature to do.

How to set up Visual Intelligence on an iPhone 15 Pro in iOS 18.4

If you want to use Visual Intelligence on your iPhone 15 Pro, you'll need to find a way to launch the feature since only iPhone 16 models come with a Camera Control button. Fortunately, you've got two other options, thanks to the iOS 18.4 update.

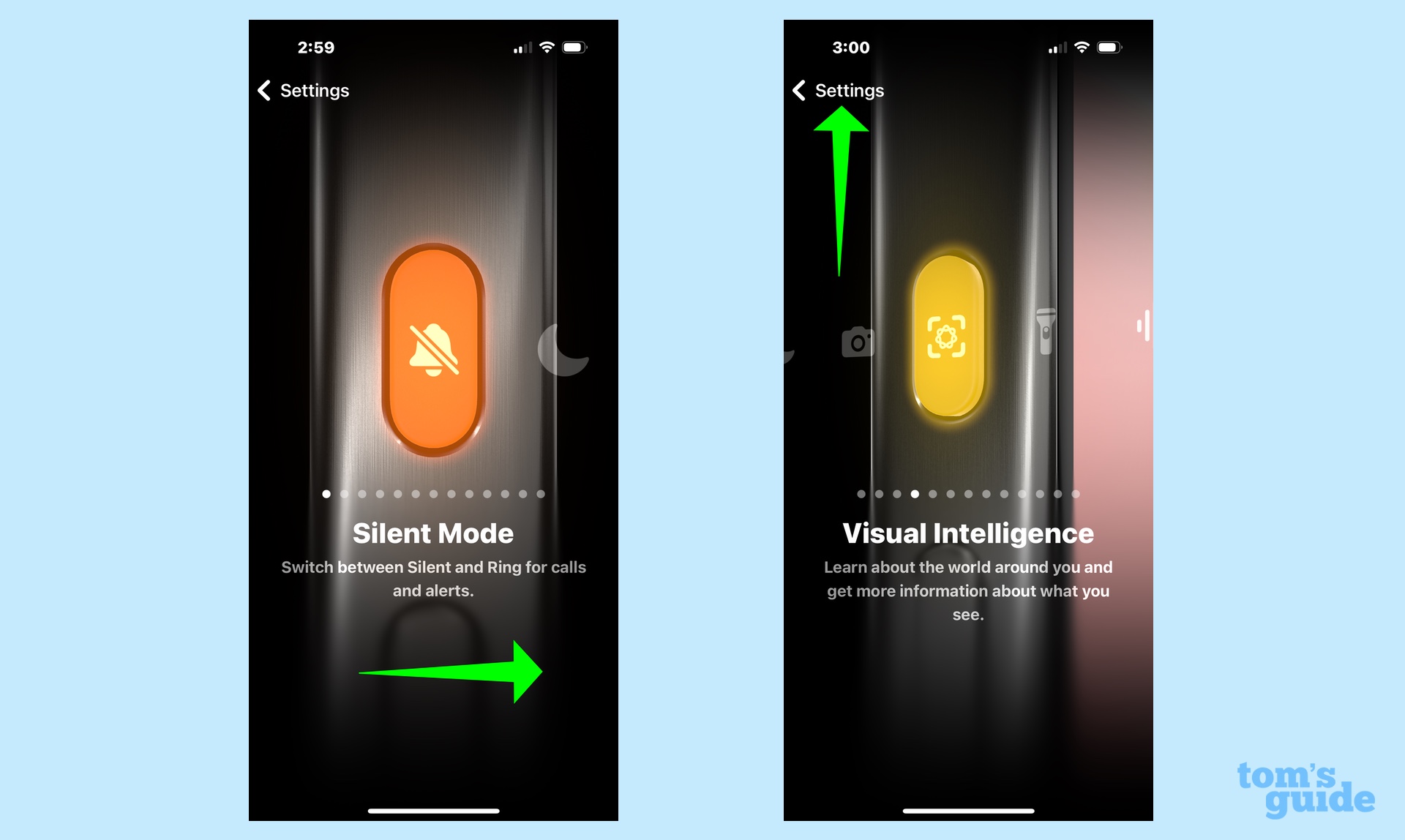

iOS 18.4 adds Visual Intelligence as an option for the Action button, so you can use that button on the left side of your iPhone to trigger Visual Intelligence. Here's how.


1. Go into the Action Button menu in Settings.

Launch the Settings app, and on the main screen, tap on Action Button.

2. Select Visual Intelligence as your action.

You'll see a list of possible shortcuts to trigger with the Action button. Scroll through by swiping until you see Visual Intelligence. Tap on Settings to exit.

From that point, whenever you press and hold the Action button, it will launch Visual Intelligence.

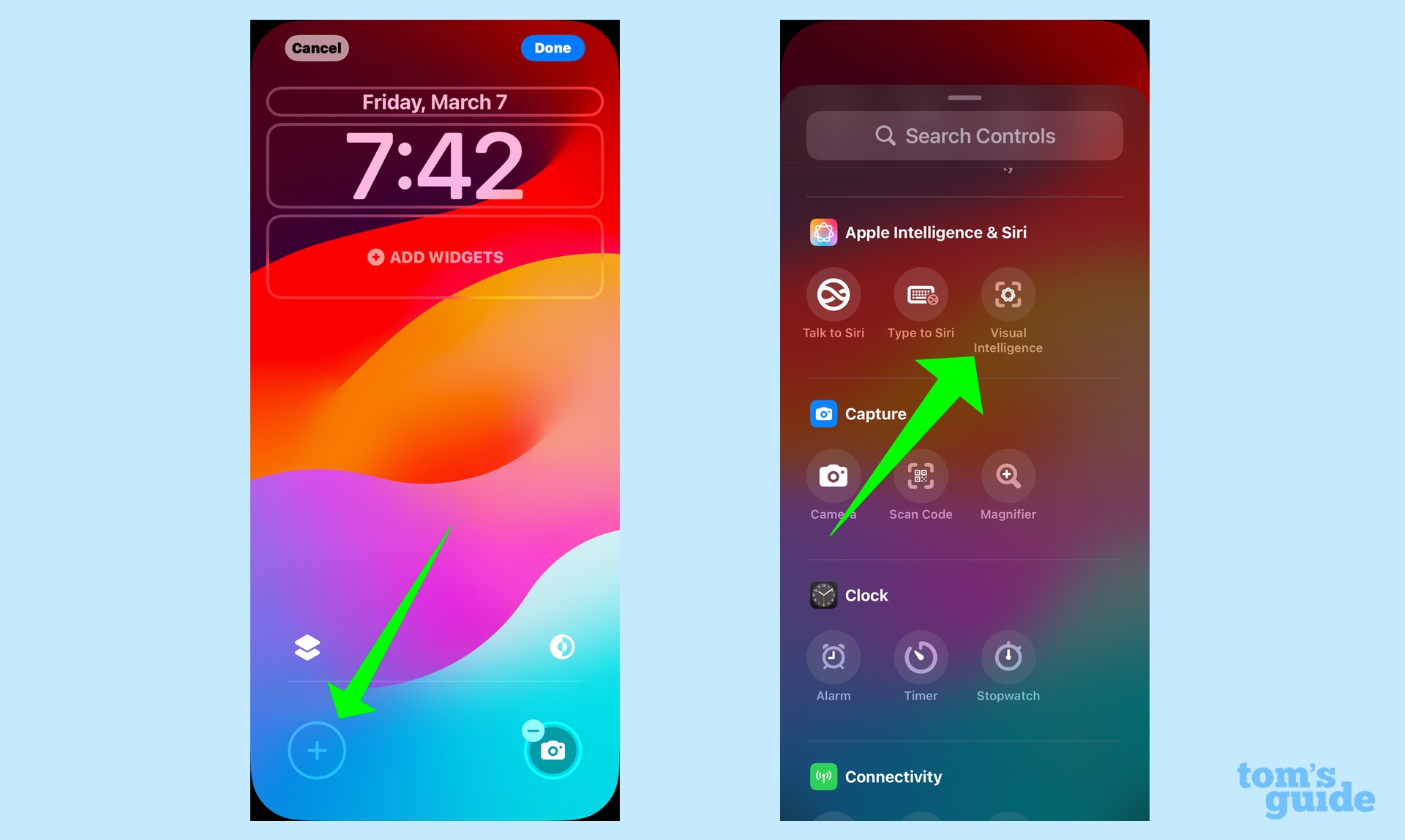

If you don't want to tie up your Action button with Visual Intelligence, you can take advantage of iOS 18's customizable lock screen shortcuts instead. iOS 18.4 adds a Visual Intelligence control that you can place at the bottom of your lock screen.

To add that control, edit your lock screen and select the control you want to customize. (In the screen above, we're putting it on the bottom left corner.) Select Visual Intelligence from the available options. You'll find it under Apple Intelligence & Siri controls, though you can also use the Search bar at the top of the screen to track down the control. Tap the icon to add it to your lock screen.

What you can do with Visual Intelligence

So your iPhone 15 Pro is now set up to launch Visual Intelligence, either from the Action button or a lock screen shortcut. What can you do with this feature?

Essentially, Visual Intelligence turns the iPhone's camera into a search tool. We have step-by-step instructions on how to use Visual Intelligence, but if your experience is like mine, you'll find things very intuitive.

Once Visual Intelligence launches, point your camera at the thing you want to look up — it could be a business's sign, a poster or just about anything. The iOS 18.3 update that arrived last month added the ability to identify plants and animals, for example.

The information that appears on your screen varies depending on what you point at. A restaurant facade might produce the hours the place is open, while you can also collect phone numbers and URLs by capturing them with Visual Intelligence.

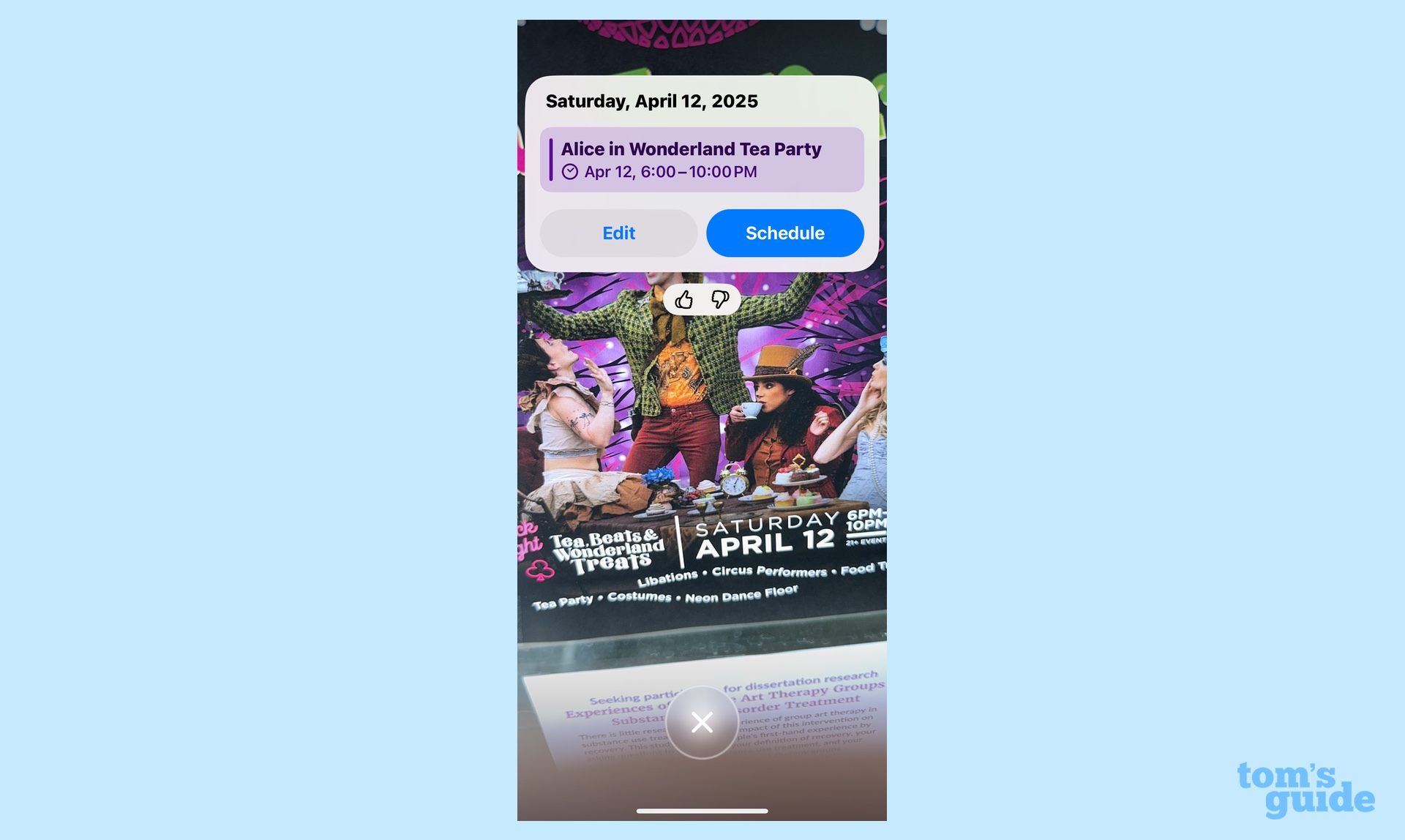

I captured a poster of an upcoming event with my iPhone 15 Pro, and Visual Intelligence gave me the option of creating a calendar event with the date and time already filled in. It would be nice if the location were copied over, too, since that information was also on the poster, but we'll chalk that up to this being early days for Visual Intelligence.

Visual Intelligence can also get flummoxed in situations like these. When I tried to add a specific soccer match to my calendar from a schedule listing multiple dates, Visual Intelligence got confused as to which one to pick. (It seems to default to the date at the top of the list.) Having to edit incorrect data defeats most of the purpose of this particular capability, but you'd expect Apple to expand Visual Intelligence's bag of tricks over time.

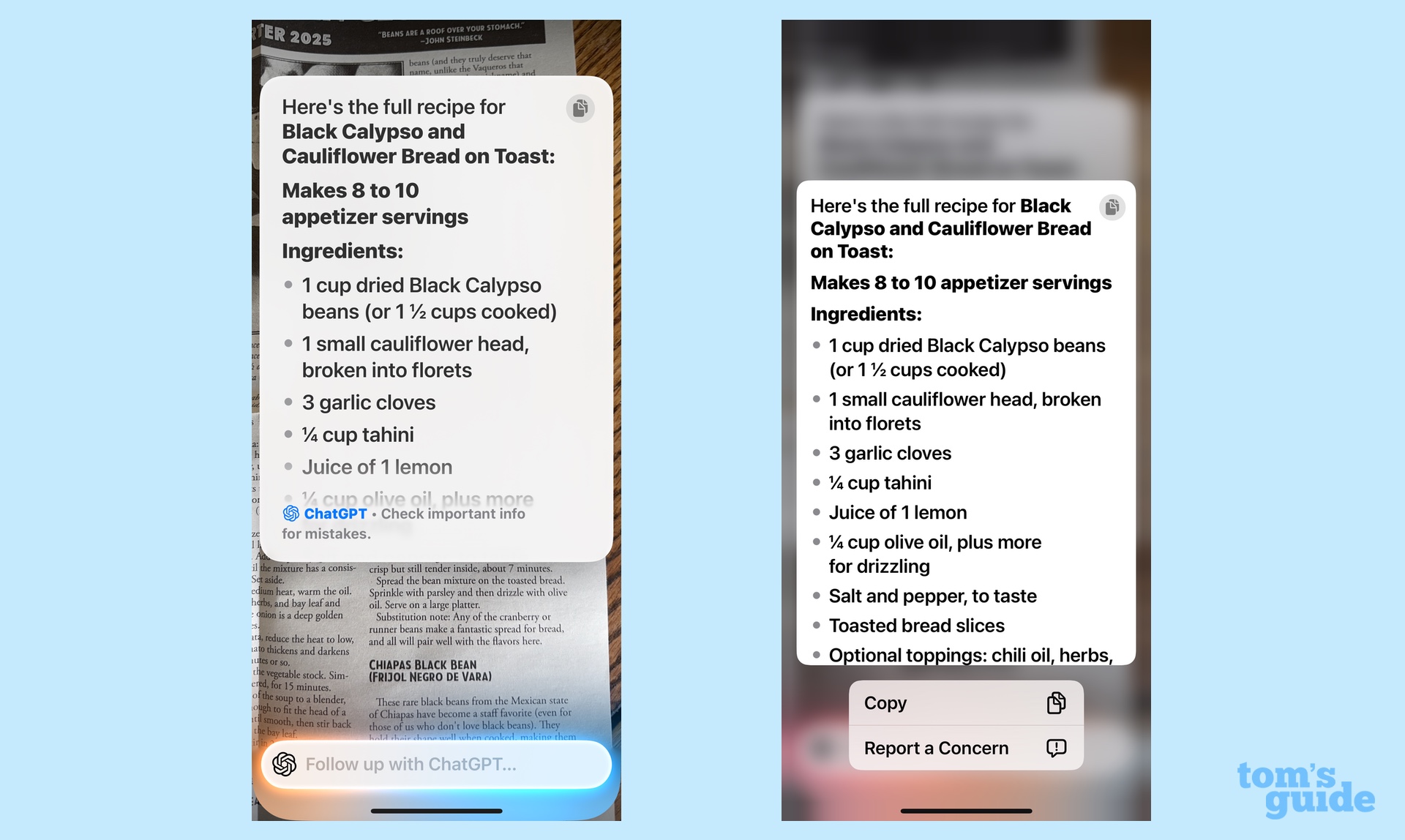

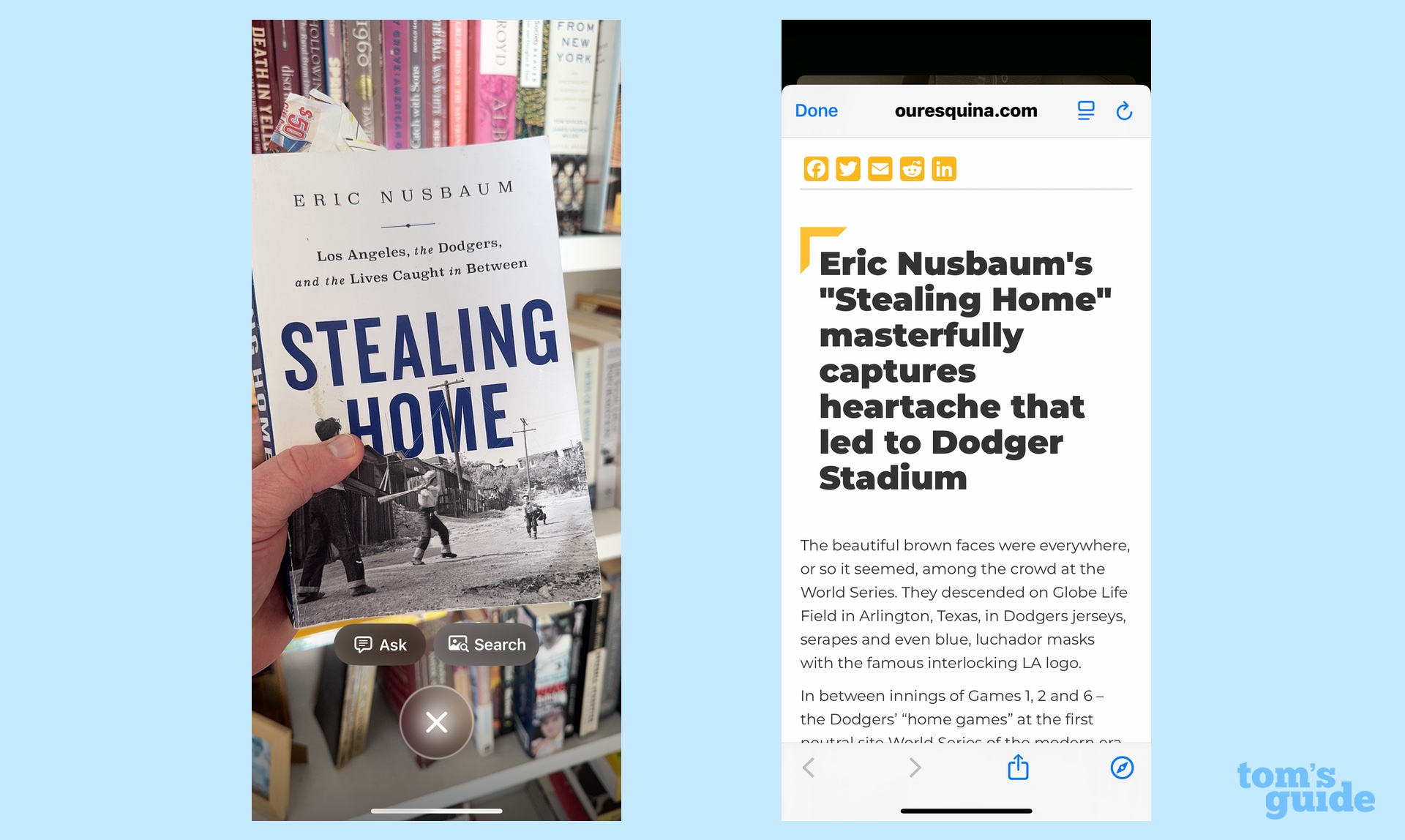

You have two other options for expanding on the info Visual Intelligence gives you. If you've enabled ChatGPT in Apple Intelligence, you can share the information with ChatGPT by selecting the Ask button, or you can tap Search to run a Google search on the image you've collected.

Of those two options, ChatGPT seems to be the more fully featured in my experience. When I captured a recipe for a bean dip, ChatGPT initially summarized the article, but by asking a follow-up question, I could get the chatbot to list the ingredients and the steps, which I could then copy and paste into the Notes app on my own. To me, that's a lot more handy than having to pinch and zoom on a photo of a recipe or, worse, transcribing things myself.

Google searches of Visual Intelligence image captures can be a lot more hit and miss. A photo of a restaurant marquee near me produced search results with similarly named restaurants, but not the actual restaurant I was in front of.

Google Search did a much better job when I took a picture of a book cover via Visual Intelligence, and the subsequent search results produced reviews of the book from various sites. That could really be useful the next time I'm in a book store — it's a place that sells printed volumes, youngsters, ask your parents — and want to know if the book I'm thinking of buying is actually as good as its cover.

That's been my experience with Visual Intelligence so far, but my colleagues have been using it since it came out last year as everything from a virtual guide in an art museum to a navigation tool for getting out of a corn maze. If you've got an iPhone 15 Pro, you can now try out your own uses for Visual Intelligence.


Philip Michaels is a Managing Editor at Tom's Guide. He's been covering personal technology since 1999 and was in the building when Steve Jobs showed off the iPhone for the first time. He's been evaluating smartphones since that first iPhone debuted in 2007, and he's been following phone carriers and smartphone plans since 2015. He has strong opinions about Apple, the Oakland Athletics, old movies and proper butchery techniques. Follow him at @PhilipMichaels.
