Apple announces details about its new AI models — this is a game-changer for the industry

This is how it compares to its rivals

You’ve probably seen headlines mentioning Apple Intelligence after Apple’s annual WWDC event for developers, but what exactly is Apple Intelligence made of?

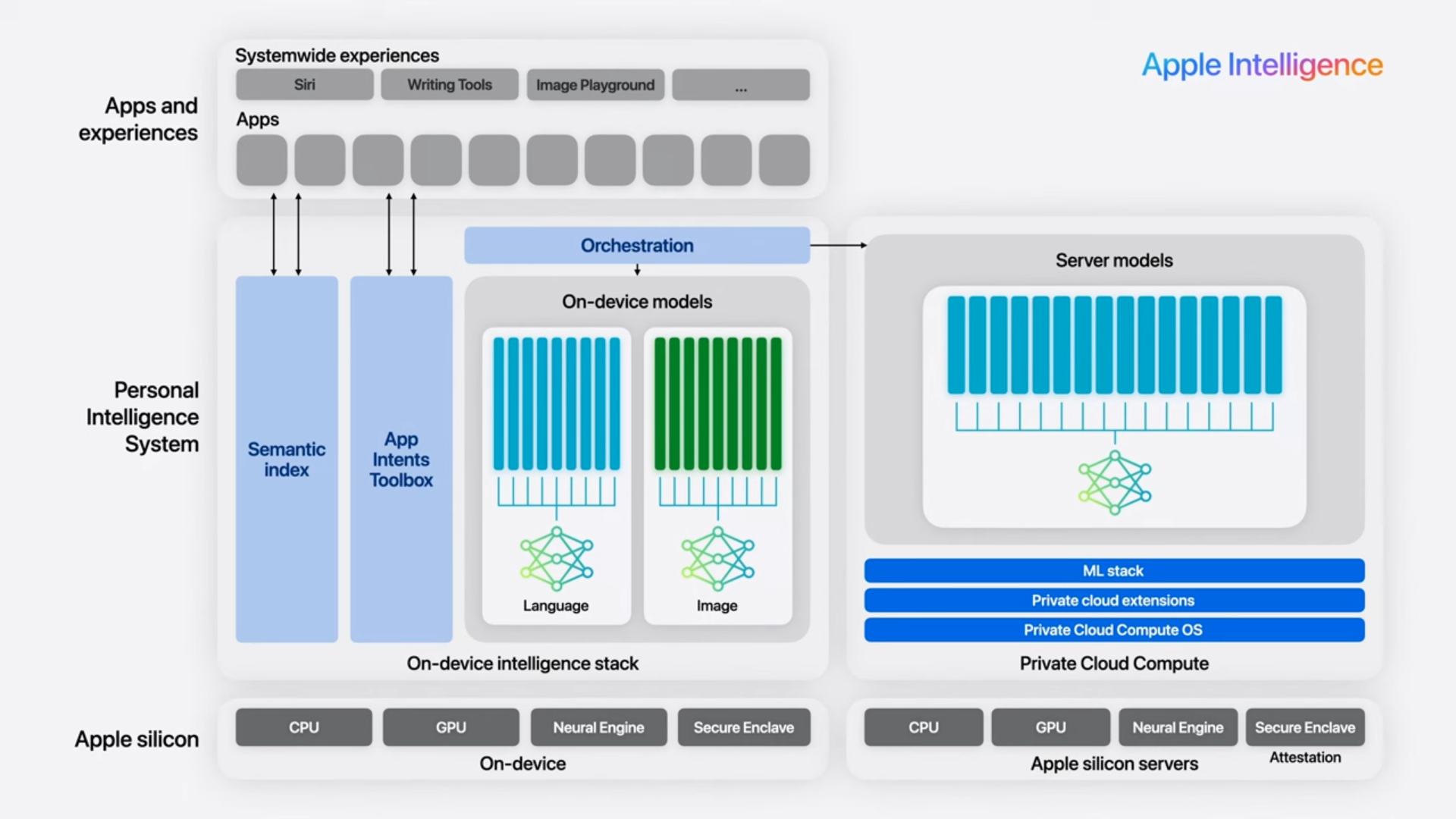

Apple Intelligence is the new "personal intelligence system" (what the rest of us have been calling artificial intelligence) that puts powerful generative models at the heart of your Apple devices. A beta version is set to become available this fall.

In a blog post, Apple said Apple Intelligence is made up of several generative models that adapt to a user’s everyday tasks.

Whether that’s writing or improving text, prioritizing notifications, or creating images for your conversations with friends, Apple will apply the model that’s most specialized for whatever it is you want to achieve.

The system includes an on-device model with around 3 billion parameters and a larger server-based language model for more complex tasks.

How Apple Intelligence works

Sure, having a massive large language model (LLM) on your iPhone would be useful, but the limits of our current technology mean your battery and storage would be drained relatively quickly, hence the need for a second leaner model.

For instance, the on-device model has a vocabulary size of 49,000 tokens, as opposed to 100,000 for the server model. The server model's vocabulary is similar in size to that of ChatGPT.
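To put the storage concern above into rough numbers, here is a back-of-the-envelope sketch. The only figure taken from the article is the parameter count of around 3 billion; the bits-per-weight values are assumptions chosen for illustration, and the function name `model_size_gb` is hypothetical.

```python
def model_size_gb(parameters: float, bits_per_weight: int) -> float:
    """Approximate storage needed for the weights alone, in gigabytes."""
    return parameters * bits_per_weight / 8 / 1e9


# "Around 3 billion parameters", per the article.
ON_DEVICE_PARAMS = 3e9

# Assumed precisions for illustration only; the article does not say
# which precision the on-device model actually uses.
for bits in (16, 8, 4):
    size = model_size_gb(ON_DEVICE_PARAMS, bits)
    print(f"{bits}-bit weights: ~{size:.1f} GB")
```

Under these assumptions the weights alone would take roughly 6 GB at 16 bits per weight and 1.5 GB at 4 bits, which gives a sense of why a much larger model is a poor fit for a phone.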

Yesterday Apple strongly advocated for on-device AI with @Apple Intelligence! Today, we know they will run ~3B LLM on device (Mac, iPhone, iPad) using fine-tuned LoRA Adapters for different tasks, claiming to outperform other 7B and 3B LLMs! 👀 What we know: 📱 On-device 3B… pic.twitter.com/i8Da48XAA3 (June 11, 2024)

Apple VP of Intelligent System Experience Engineering, Sebastien Marineau-Mes, said they'd pushed the limits of what could be run locally with the on-device models.

The aim was to ensure it was "powerful enough for experience we wanted, yet small enough to run on device." This involved creating a large model, then fine-tuning it over and over again for niche tasks.

Apple then developed a technique called adapters, which sit on top of the common foundation model and allow it to specialize on the fly for the task at hand.

Alongside the language models, Apple has a diffusion model that handles image generation for features such as Genmoji.
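The adapter idea can be sketched in a few lines. This is a minimal illustration, assuming the adapters are low-rank (LoRA-style) updates as the social media post quoted above suggests; it is not Apple's implementation. The class `AdaptedLinear`, its methods, and the toy weights are all hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class AdaptedLinear:
    """A frozen base weight W plus swappable low-rank updates (up @ down)."""

    def __init__(self, base_weight):
        self.base_weight = base_weight  # shared by every task, never modified
        self.adapters = {}              # task name -> (down, up) matrices
        self.active = None              # currently selected task, if any

    def add_adapter(self, task, down, up):
        # down has shape (rank, d_in); up has shape (d_out, rank)
        self.adapters[task] = (down, up)

    def use(self, task):
        # Switching tasks only changes which small matrices are applied.
        self.active = task

    def forward(self, x):
        y = matvec(self.base_weight, x)
        if self.active is not None:
            down, up = self.adapters[self.active]
            delta = matvec(up, matvec(down, x))
            y = [base + extra for base, extra in zip(y, delta)]
        return y


def adapter_param_count(d_in, d_out, rank):
    """Number of weights in one low-rank adapter for a d_out x d_in layer."""
    return rank * (d_in + d_out)


# Toy example: a 4x4 identity base layer and a rank-1 "summarize" adapter.
identity = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
layer = AdaptedLinear(identity)
layer.add_adapter("summarize", down=[[1, 0, 0, 0]], up=[[2], [0], [0], [0]])

x = [1, 2, 3, 4]
base_output = layer.forward(x)      # no adapter selected
layer.use("summarize")
adapted_output = layer.forward(x)   # base output plus low-rank update
```

The point of the design is that the base weights are shared and stay fixed, while each task adds only `rank * (d_in + d_out)` extra weights. For a 4x4 layer at rank 1 that is 8 weights instead of 16, and the saving grows quickly with layer size.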

How Apple Intelligence compares

Apple compared its generative models to others on the market and said it focused on human evaluation rather than automated benchmarks, as it believes human grading correlates better with an actual user’s experience.

In a test of general capabilities, which looked at things like brainstorming, mathematical reasoning, and answering open questions, Apple’s on-device model performed better than the lightweight open model Microsoft Phi-3-mini, winning 43% of the tests compared to Phi-3-mini’s 32.4%.

However, GPT-4-Turbo outperformed Apple’s server-based model, winning 41.7% of tests compared to Apple’s 28.5%. Those two models tied 29.8% of the time.
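The percentages above can be sanity-checked with a little arithmetic. This sketch assumes each head-to-head test ends in exactly one of three outcomes (a win for either side, or a tie) so the shares sum to 100%. The win rates come from the article; the tie rate for the Phi-3-mini comparison is derived under that assumption, not quoted, and the function name `tie_rate` is hypothetical.

```python
def tie_rate(win_a: float, win_b: float) -> float:
    """Share of tests that ended in a tie, assuming win/tie/loss sum to 100%."""
    return round(100.0 - win_a - win_b, 1)


# (Apple model win %, rival win %) as reported in the article.
comparisons = {
    "on-device vs Phi-3-mini": (43.0, 32.4),
    "server vs GPT-4-Turbo": (28.5, 41.7),
}

for name, (apple_wins, rival_wins) in comparisons.items():
    ties = tie_rate(apple_wins, rival_wins)
    margin = round(apple_wins - rival_wins, 1)
    print(f"{name}: ties {ties}%, Apple margin {margin:+} points")
```

For the server comparison the derived tie rate matches the 29.8% reported, which suggests the three-outcome assumption is reasonable.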

In terms of safety, both of Apple’s models outperformed their competitors in tests on harmful content, sensitive topics, and factuality. For example, the model running on Apple servers had a violation rate of 6.6% compared to GPT-4-Turbo’s 20.1%, where a lower score indicates better performance.

Apple said it trained its models on licensed and publicly available data. It also said it does not use its users' private personal data or user interactions when training its foundation models.

Christoph Schwaiger is a journalist who mainly covers technology, science, and current affairs. His stories have appeared in Tom's Guide, New Scientist, Live Science, and other established publications. Always up for joining a good discussion, Christoph enjoys speaking at events or to other journalists and has appeared on LBC and Times Radio among other outlets. He believes in giving back to the community and has served on different consultative councils. He was also a National President for Junior Chamber International (JCI), a global organization founded in the USA. You can follow him on Twitter @cschwaigermt.