AI companies accused of scanning YouTube data without permission — here's what we know

Leading AI labs and big tech companies have been accused of using captions from videos on tens of thousands of YouTube channels without permission to train artificial intelligence models.

Google has strict rules in place banning the harvesting of material from YouTube without permission. A new investigation by Proof News claims several big tech companies used the subtitles from more than 170,000 videos.

The captions were part of 'the Pile', a massive dataset compiled by the non-profit EleutherAI. Although the Pile was originally intended to give smaller companies and individuals a quick way to train their models, big tech and AI companies have also adopted this vast reservoir of information.

Apple was originally on the list of companies claimed to have used the Pile, but it has since disputed these claims. Apple says it is committed to respecting the rights of creators and publishers, and it offers an opt-out from having content used to train Apple Intelligence.

A thirst for data

Several studies have now found that two things are essential in making more advanced AI models — data and computing power.

Increasing either or both leads to better responses, improved performance and greater scale. But data is an increasingly scarce and expensive commodity.

There are several lawsuits underway at the moment against AI image and music generation companies over whether using copyrighted material as training data counts as fair use.

Companies like OpenAI and Google have a combination of their own massive data repositories and deals with major publishers or with Reddit.


Meta has Facebook, Instagram, Threads and WhatsApp, although it is facing pushback from users. Apple has a vast amount of user data, but its own privacy policies make this less useful in initial model training.

This lack of available data is leading companies to look for new sources of information to train next-generation models. Not all of those sources are willing to part with their data, or are even aware that the information they’re creating is being used to train AI.


What went wrong?

While the AI companies are not directly responsible for these YouTube captions ending up in their training data, their inclusion does raise questions about data provenance and how thoroughly big tech checks rights before training on a dataset.

It wasn’t just videos from small creators that were included. The BBC, NPR, the Wall Street Journal, MrBeast and Marques Brownlee all had videos in the dataset.


In total, the YouTube Subtitles dataset contained 173,536 videos from 48,000 channels. Some of the videos included conspiracy theories and parody, which could affect the integrity of any model trained on them.

This isn't the first time YouTube has been at the center of an AI training data controversy. OpenAI CTO Mira Murati was previously unable to confirm or deny whether YouTube was used to train Sora, the company's advanced but as yet unreleased AI video model.

Speaking to Wired, Dave Wiskus, CEO of Nebula, described using data without consent as "theft" and "disrespectful", especially as studios are already using generative AI to "replace as many of the artists" as they can.

Anthropic said in a statement to Ars Technica that the Pile includes only a small subset of YouTube subtitles, and that YouTube's terms cover direct use of its platform, which is distinct from use of the Pile dataset. "On the point about potential violations of YouTube’s terms of service, we’d have to refer you to The Pile authors."

What will happen to the AI?

Google says it has taken action over the years to prevent abuse, but it has given no further detail on what that action involved, or on whether this use violates its terms.

However, Google isn't entirely blameless itself, having been caught scanning documents saved in Google Drive with its Gemini AI even when users hadn't given permission.

Creators are annoyed at the discovery, but with questions of data provenance and copyright in model training still very much up for debate, their only likely recourse is for Google to decide that this use violates YouTube's terms.

This instance of potential data misuse will likely be bundled into the wider question of whether using material as training data counts as fair use or requires specific licensing. I suspect we won't get a final decision on that for years.

More from Tom's Guide

- Apple is bringing iPhone Mirroring to macOS Sequoia — here’s what we know

- iOS 18 supported devices: Here are all the compatible iPhones

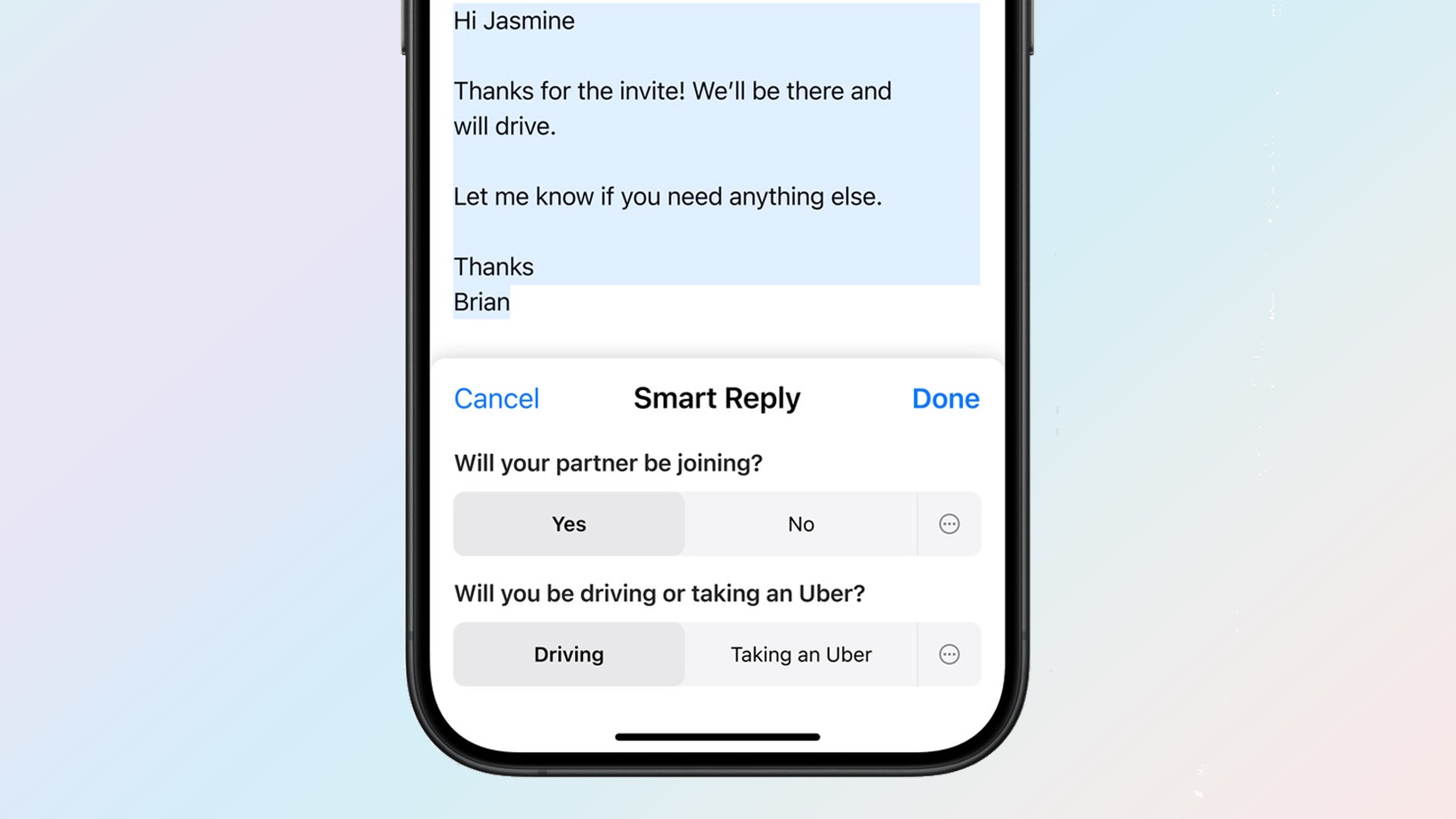

- Apple Intelligence unveiled — all the new AI features coming to iOS 18, iPadOS 18 and macOS Sequoia

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on AI and technology speak for him than engage in this self-aggrandising exercise. As the former AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover.

When not begrudgingly penning his own bio (a task so disliked he outsourced it to an AI), Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing.