YouTube will now help you spot AI in videos — here’s how

YouTube creators will have to disclose realistic-looking AI content

In a world of misinformation, the idea that people can create realistic video fakes with the help of generative AI is concerning, all the more so when those videos can easily spread on platforms like YouTube.

So it’s good to hear that YouTube will be enforcing new rules requiring creators to disclose whether their videos feature content that is “altered or synthetic”, a category that includes generative AI.

YouTube first announced this change back in November, noting that it would be introducing features to better inform viewers when they’re watching synthetic content. One of those features has now been added to Creator Studio, so creators can flag a video as containing generated content when they upload it.

The idea here is that creators have to flag realistic-looking content that someone could mistake for genuine footage. Anything involving clearly unrealistic video, animation or special effects is exempt, even if generative AI was involved in production.

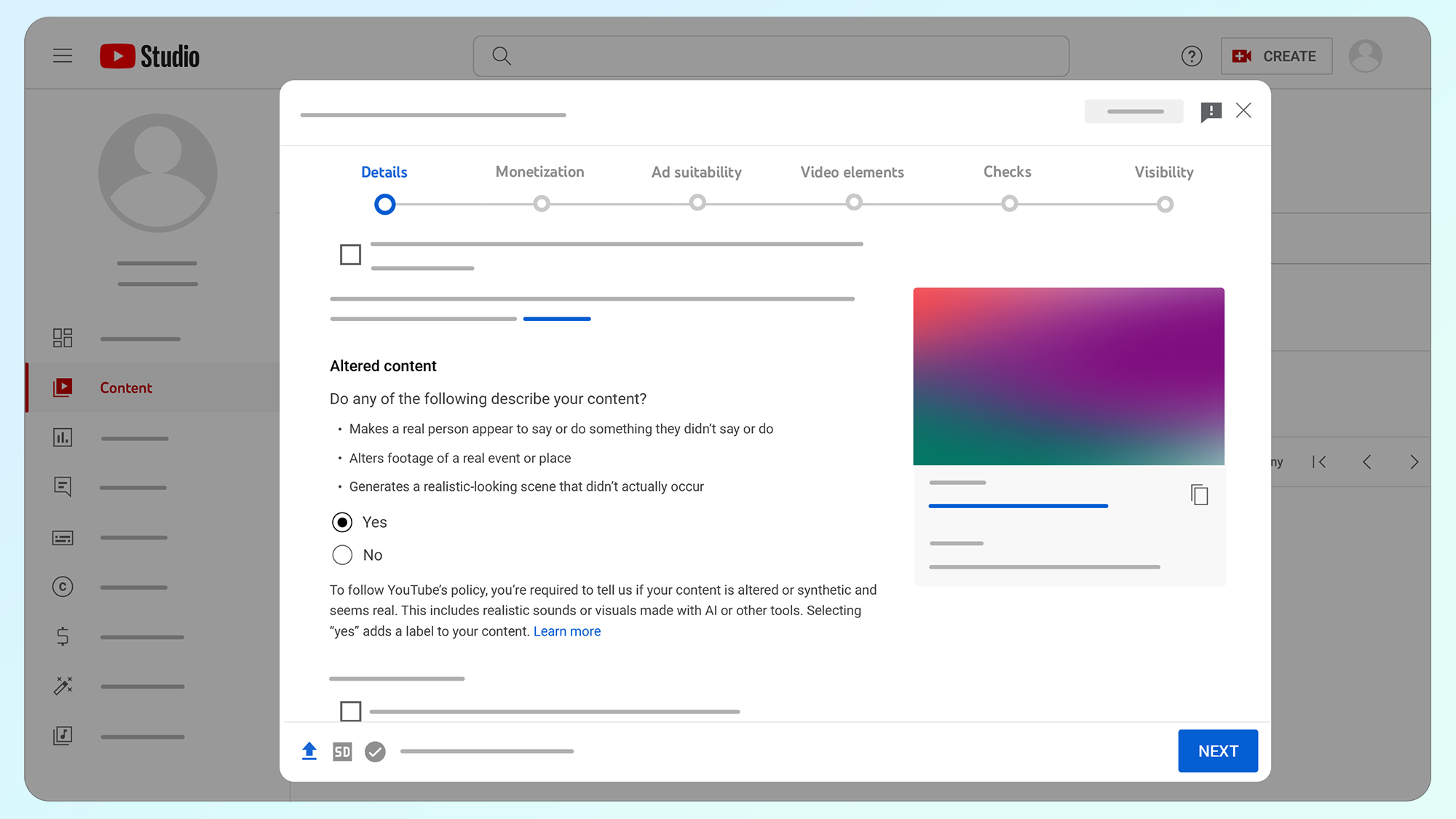

The flag is a simple yes/no option in the content settings, with the menu detailing the kinds of things YouTube considers “altered or synthetic”. That includes showing a real person saying or doing something they never said or did, altering footage of real events, or depicting realistic-looking events that didn’t happen.

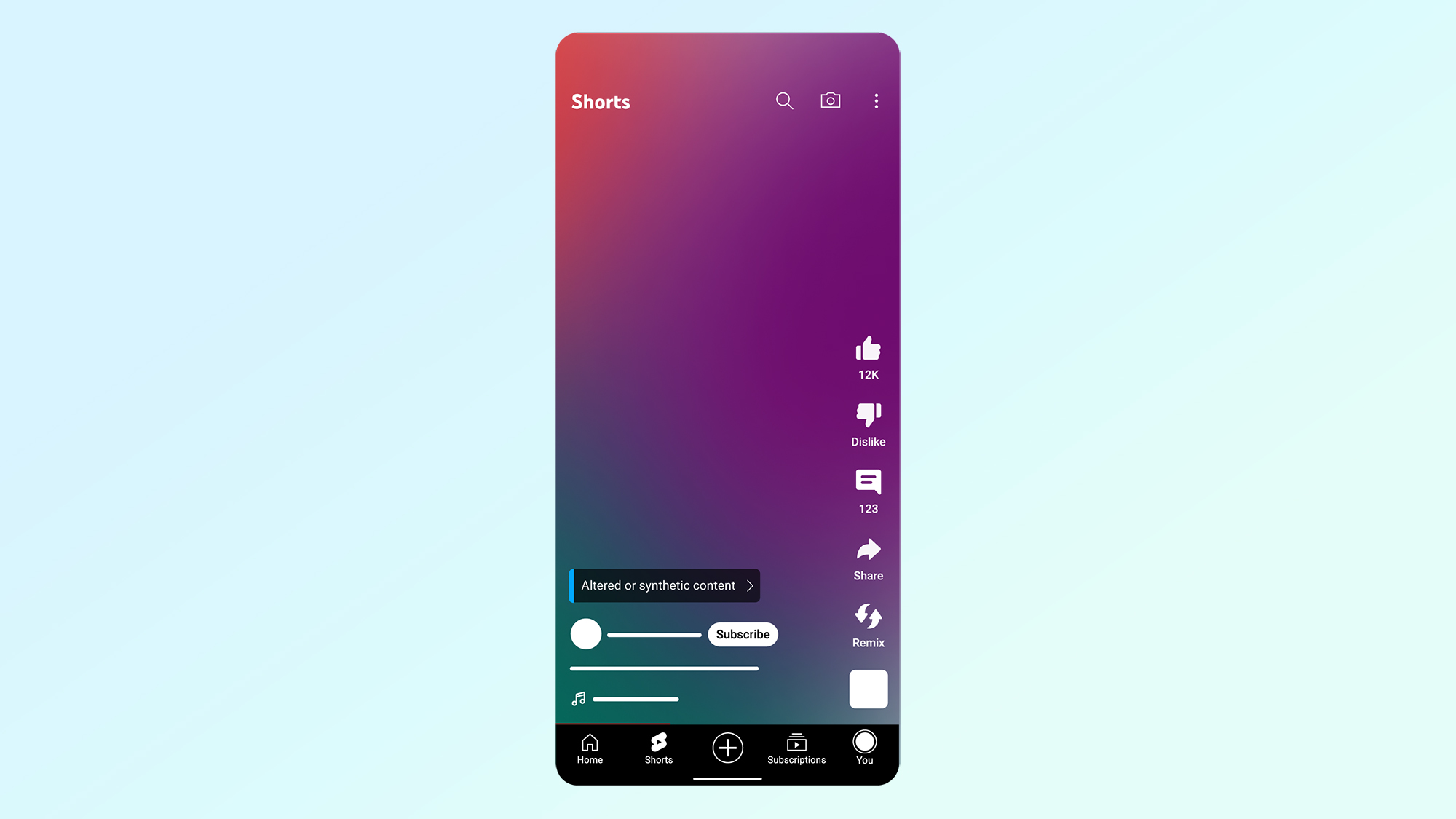

YouTube showed a couple of examples of how AI-infused videos will be labeled. One involves a tag above the name of the account pointing out that the video contains altered or synthetic content, while another adds a disclaimer in the video’s description.

YouTube explains that the more prominent label will be primarily used on videos covering “sensitive topics”, including healthcare, elections, finance and news. For others the description disclaimer will apparently suffice.
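For readers who think in code, the labeling rule described above can be sketched roughly as follows. This is only an illustration of the policy as reported here, not YouTube's actual implementation: the `Upload` class, the `label_placement` function, the topic strings and the return values are all hypothetical names made up for this example, and YouTube says the prominent label is "primarily" for sensitive topics, so the real rule is likely less clear-cut.

```python
from dataclasses import dataclass

# Topics the article lists as "sensitive" (the strings are illustrative only).
SENSITIVE_TOPICS = {"healthcare", "elections", "finance", "news"}


@dataclass
class Upload:
    """Hypothetical stand-in for a video upload; not a real YouTube API object."""
    topic: str
    # The creator's yes/no answer: does the video contain realistic-looking
    # altered or synthetic content?
    altered_or_synthetic: bool


def label_placement(upload: Upload) -> str:
    """Return where the disclosure label would appear under the reported policy."""
    if not upload.altered_or_synthetic:
        # Unrealistic content, animation and special effects need no disclosure.
        return "none"
    if upload.topic.lower() in SENSITIVE_TOPICS:
        # Sensitive topics get the more prominent tag near the account name.
        return "prominent"
    # Everything else gets a disclaimer in the video description.
    return "description"


print(label_placement(Upload(topic="elections", altered_or_synthetic=True)))
print(label_placement(Upload(topic="gaming", altered_or_synthetic=True)))
print(label_placement(Upload(topic="news", altered_or_synthetic=False)))
```

Under these assumptions, a disclosed election video would get the prominent tag, a disclosed gaming video would get the description disclaimer, and an undisclosed or unaltered video would get no label at all.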

Enforcement measures haven’t been specifically outlined, and YouTube says it wants to give creators time to adjust to the new rule. However, it does mention that consistently failing to disclose AI-generated content may lead to YouTube adding those labels automatically, especially if the content has the potential to mislead or confuse viewers.

The new labels will start rolling out “in the weeks ahead”, starting on the YouTube mobile app and later expanding to desktop and TV. So be sure to keep an eye out for those all-important labels, especially if you’re not sure how genuine a video is. Not every altered video will carry the flag, but it pays to know what to look for considering how prominent AI misinformation is likely to become before the end of the year.

Tom is Tom's Guide's UK Phones Editor, tackling the latest smartphone news and vocally expressing his opinions about upcoming features or changes. It's a long way from his days as editor of Gizmodo UK, when pretty much everything was on the table. He’s usually found trying to squeeze another giant Lego set onto the shelf, draining very large cups of coffee, or complaining about how terrible his Smart TV is.