Meet ReelMagic — a new AI video tool for creating entire short films from a single prompt

I put it to the test

Generative AI video tools have reached a point where you can create five seconds of video almost indistinguishable from a human-filmed clip. However, consistency is still an issue if you want to make a short film, commercial, or music video, as characters aren’t carried between clips.

It is possible to keep characters consistent, but you need to start from an image and ensure consistency across every image you use in the video prompts. The first platform to tackle this was LTX Studio from Lightricks, but it is limited to the models in the platform. ReelMagic from Higgsfield changes that.

ReelMagic brings together the best AI content workflows into one platform, generating a script from your prompt, creating an image with custom characters for each shot and then allowing you to turn those images into videos and customize the story in a timeline view.

You can create a short film up to 10 minutes long and can pick between Recraft, Keyframe, Flux and Higgsfield Frame for the images, and Runway, Kling and MiniMax for creating video. You also have sound effects and voices powered by ElevenLabs, plus lip-syncing for the AI actors.

Putting ReelMagic to the test

ReelMagic plays on the fact that every AI image or video model offers something different. Some are better at capturing emotion, others at ultra-realism. When I craft an AI video project manually, I often have to use a combination of models to achieve the desired effect.

Making a 3-minute video with AI can take the best part of a week once you include writing the script, planning the shots, generating each image (you’ll often need to run the prompt multiple times to get the exact image you had in mind) and then generating the video clip from each image (again, often running it several times because of errors).

Once you’ve got the videos, you need to move on to sound design: crafting sound effects, atmosphere, music and voiceovers. Some shots will also require lip-syncing. After watching it back, I often find it is missing a shot, so I have to go back and repeat the process.

ReelMagic does all of that in one go from a single prompt. You get presented with a script that you can edit, a list of images that you can regenerate or replace and then a way to generate video for each shot, model-by-model or as a whole. It automatically adds sound effects which you can also adapt to your own needs.

Higgsfield, creator of ReelMagic, wrote that it “enables creators to edit specific elements without affecting the look/feel of their overall story.” My favorite feature is being able to define and design characters once and have them change throughout the project.
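
To picture why defining a character once makes single-shot edits safe, here is a minimal Python sketch. It is purely illustrative and is not ReelMagic's API or how Higgsfield implements it: the `Character` and `Shot` classes, the `build_prompt` and `swap_image_model` helpers, and the example character "Mara" are all hypothetical. Only the model names come from the list above.

```python
from dataclasses import dataclass, replace

# Hypothetical data model for illustration only; not ReelMagic's API.


@dataclass(frozen=True)
class Character:
    name: str
    description: str  # written once, reused in every shot prompt


@dataclass(frozen=True)
class Shot:
    action: str
    character: str    # key into the shared cast
    image_model: str  # e.g. "Flux"
    video_model: str  # e.g. "Kling"


def build_prompt(shot: Shot, cast: dict[str, Character]) -> str:
    """Combine the shared character description with the shot's action."""
    who = cast[shot.character]
    return f"{who.name} ({who.description}): {shot.action}"


def swap_image_model(shots: list[Shot], index: int, model: str) -> list[Shot]:
    """Return a new shot list where only one shot uses a different image model."""
    return [
        replace(s, image_model=model) if i == index else s
        for i, s in enumerate(shots)
    ]


# Assumed example cast and shots, invented for this sketch.
cast = {"mara": Character("Mara", "woman in her 30s, grey coat, short dark hair")}
shots = [
    Shot("walks down an empty street at night", "mara", "Flux", "Kling"),
    Shot("turns as a shadow moves behind her", "mara", "Flux", "Kling"),
]

# Change the image model for the second shot only.
updated = swap_image_model(shots, 1, "Recraft")
prompts = [build_prompt(s, cast) for s in updated]
```

Because every prompt pulls the same character description from the shared cast, swapping the model for one shot leaves the other shots, and the character's look, untouched.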

Creating a story with ReelMagic

This is the final video produced through the process outlined below. Total time from initial prompt to exported video was about 35 minutes.

I gave ReelMagic this prompt:

“The night is when they come out, the shadow creatures born of our hate. The stuff of nightmares that haunt our dreams and whisper words of destruction and despair into the ears of the powerful. Nobody knows where they came from, nobody has ever seen them, but they are always there and have always been there since the dawn of human civilization. Some say they were the first species on Earth — the spirits carried on the stellar winds from a long-dead world. Others say they are figments of our ancestors that couldn't let go. All we know is ... they are getting stronger.”

I then had to select a "style". This could be inspired by an existing show, such as Wednesday, or a custom concept. I went with the original as I wanted to see what the AI came up with.

After about three minutes I was presented with a screenplay, a cast of characters and a location list that I could adapt, redesign, assign a voice to, or change to fit my idea.

As I was doing this to test the platform I went with all the settings as offered, including the screenplay. I didn’t change anything as I wanted to see what the AI came up with on its own. The idea is to see how well it can craft a full project from a single prompt.

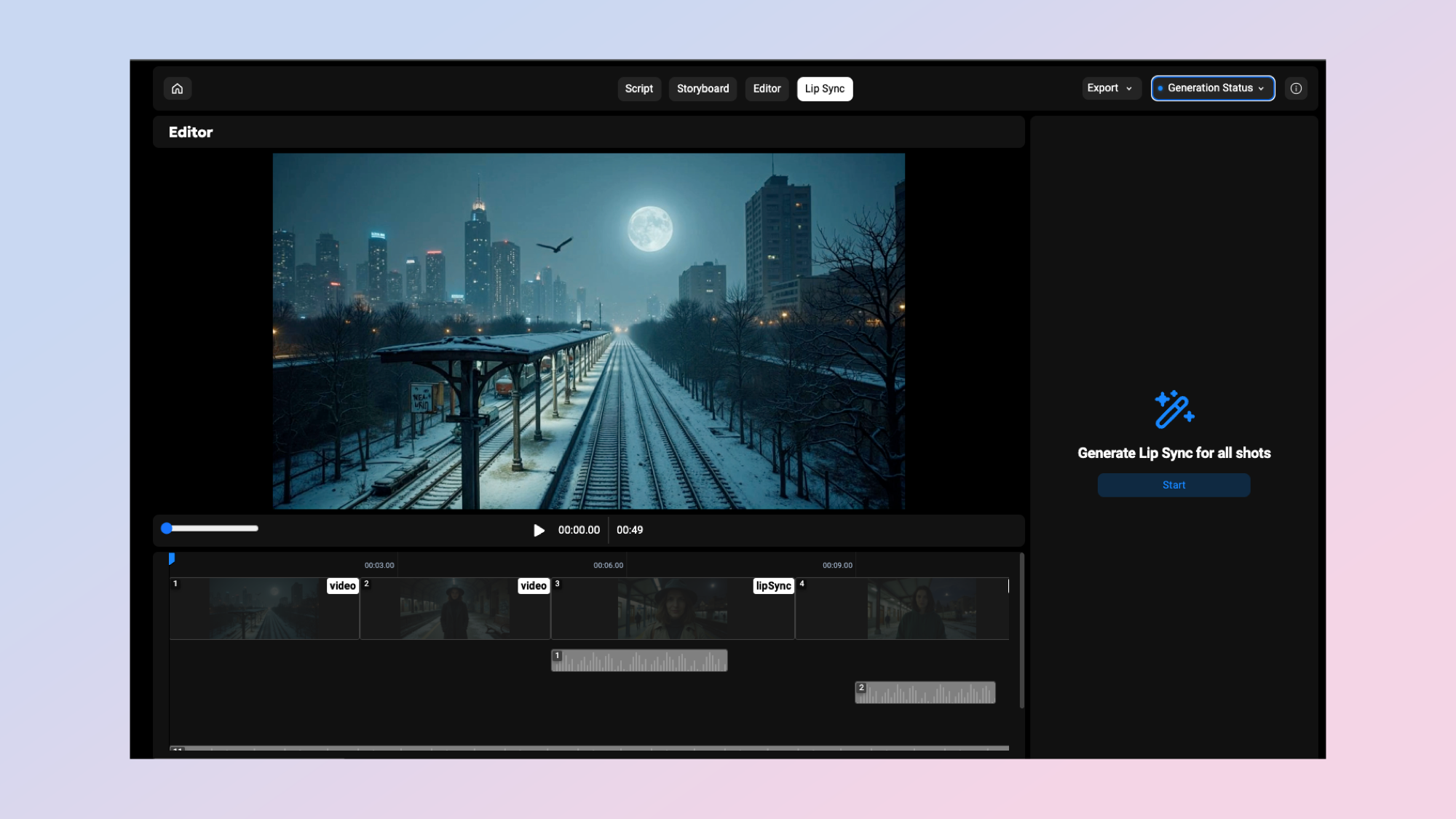

The storyboard view lets you review all the generated shots and make any appropriate changes. This includes regenerating a single shot with a different image model, swapping faces if they don't match your vision, and generating the video.

You can then go into Editor view. This is like a typical video editing platform where the individual clips are placed one after the other on a timeline, complete with sound effects and speech. Once you've generated videos, you can also have each face lip-synced to the generated voice.

I decided to have it generated using the same model for all clips — Kling 1.5 Pro — as it is a good all-rounder. It is also the slowest of all the models on offer, so this article took a little longer than I was hoping.

How it worked out

You can see the final output above in the YouTube video, complete with voice-over, lip-sync and sound effects. I also made a short, 30-second example of how I might have handled the opening scene using my normal workflow in Pika 2.0 (below).

Considering the process is largely automated, the final output was closer to something I’d have created manually than I expected.

I wouldn’t have taken the story as written because it was a little on the basic side. I’d have changed up the characters, added more variety to the shots and included more subtlety. But for a one-shot attempt, it wasn’t bad.

The true value is in how easy it is to change any single element within the process. This makes it a production tool as much as a way to create a longer-form AI video from a single prompt. I can use it to storyboard an idea, rapidly iterate over changes and then generate in one place across different models.

This is an example of where I think the next generation of AI tools will go — productization. Models are improving at a rapid rate so the next logical step is creating AI-powered tools that you can use to generate a project, rather than a single clip.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?