I made my Superman action figure talk with Pika Labs’ new AI lip sync tool — watch this

Make your characters talk

Artificial intelligence video startup Pika Labs has a feature that lets you give your generated characters a voice. Lip Sync moves the lips of a humanoid character in a video or image to a sound file or text and then turns it into a new video. It even works with action figures.

This is one of a number of solutions designed to tackle one of the biggest issues with generative artificial intelligence video at the moment — the lack of interactivity.

Most of the AI videos circulating on the web and social media are either visual slideshows with a voiceover or music videos with no dialogue.

That isn’t to say this isn’t an interesting form of storytelling, but it lacks the natural feel of a movie or TV show where characters speak to each other or to the camera.

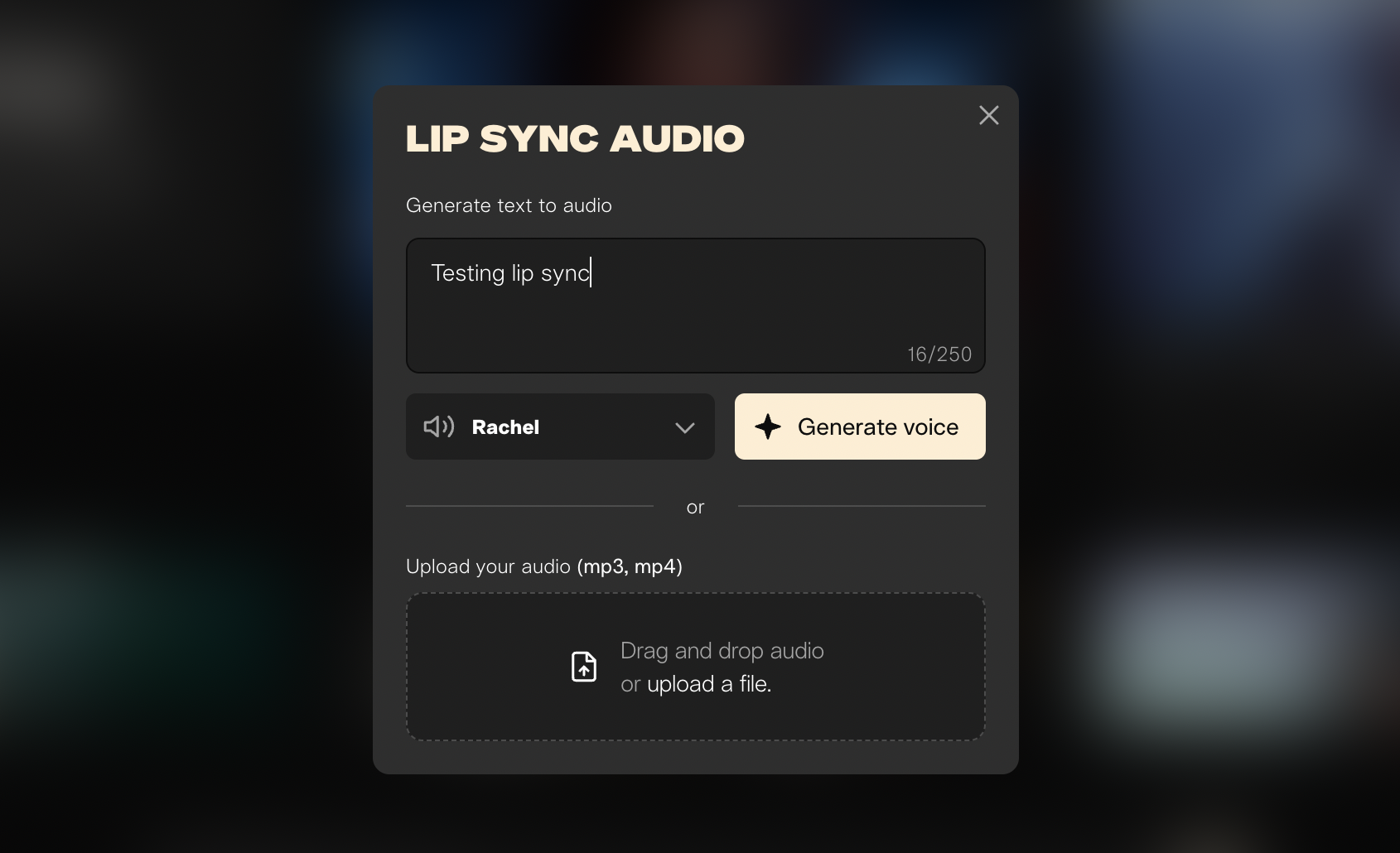

Pika Labs lets you upload a video or a still image and pair it with a sound file. It then animates the lips in time with the dialogue in the audio clip to make it look like speech.

Testing Pika Labs lip sync

Lip Sync works from an uploaded audio file, which could come from a podcast, an audiobook or a text-to-speech engine such as ElevenLabs or Amazon Polly.

If you don’t have an audio file, Pika Labs has integrated the ElevenLabs API into the process, allowing you to select from any of its default voices and just type what you want the character to say.

To get a feel for just how well the new lip sync feature works I asked Claude 3 to create five characters and give each a line of dialogue. I then used Ideogram to visualize the characters and finally I put each of them through the Lip Sync tool.

There are several ways to approach this. You can give Pika Labs a pre-animated video file, give it a still image, or convert an image to a video and then apply Lip Sync to the final video.

How well did it perform?

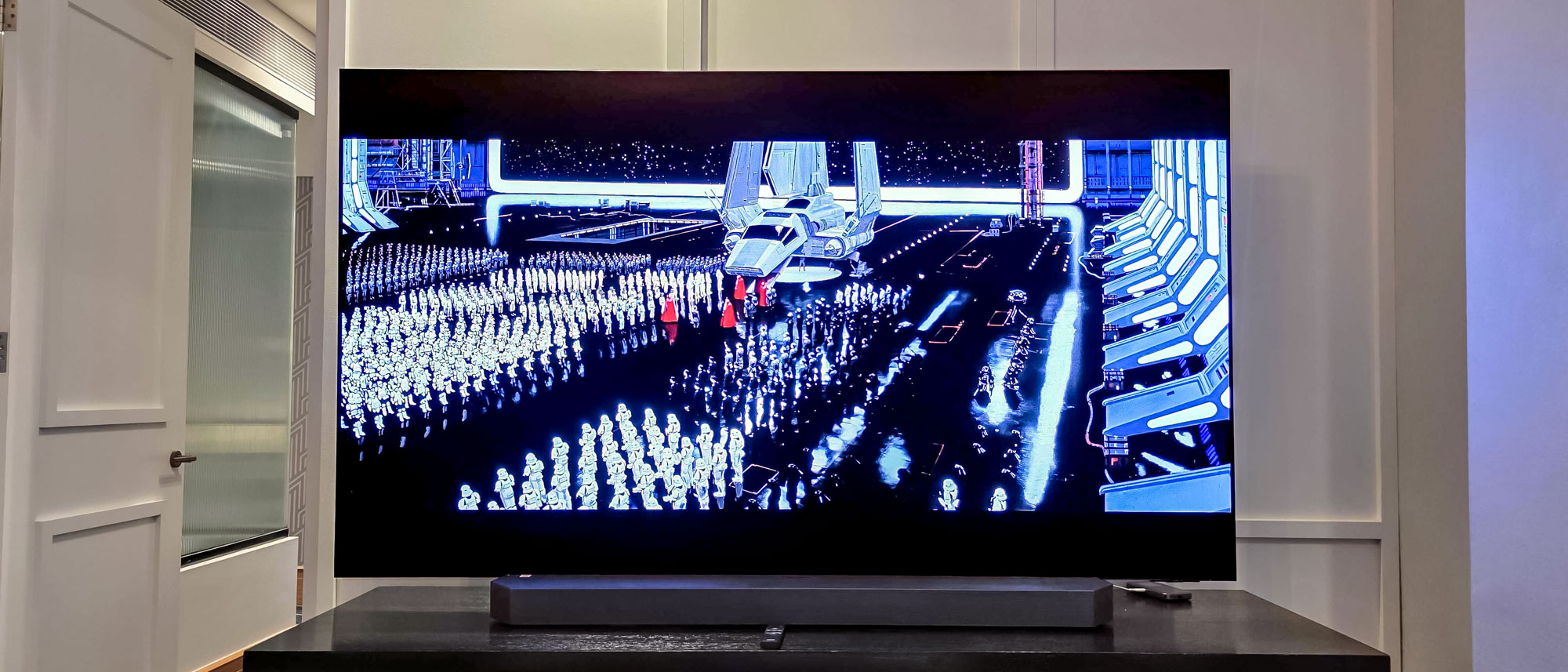

The first test I tried was giving it a short video I filmed of a Superman action figure I have on the shelf behind my desk. It worked perfectly, easily animating the mouth movement.

To test this even further I filmed a video of Black Widow and then one of Woody from Toy Story — which also worked well. Robot Chicken style shows can now be made at home.

Time to move on to the characters I created with Claude and Ideogram. The idea was to write a short script and have each character read a line, then edit them together.

I started by using the image and having it simply animate the lips — going from image to lip sync straight away, without creating a video first. While this worked well for the lip movement, it did look somewhat flat, like a talking photo rather than a video.

The first of the generated characters I tested was the android. It had blue skin and resembled a humanoid but not an actual human. This pushes the tool, which currently needs depictions of humans facing the camera to work effectively. It handled the android well, but with some artefacts around the lips.

I converted all five images into video using the usual Pika Labs image-to-video model and then applied lip sync to the resulting short clip. This worked better than going straight from image to lip sync for all but the depiction of a human. Pika Labs kept over-animating his face, so I stuck with the still.

Bringing a new dimension to video

The final test was to see if I could make a character sing by giving it a song file from Suno. The issue is that Lip Sync tends to be limited to a few seconds of audio, so I had to pick the right clip and focused on the chorus. It worked really well and will make a good addition to my next music video project.
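If you want to cut a song down to just the chorus before uploading it, a few lines of Python will do it. This is only a rough sketch and not part of Pika Labs or Suno: the function name `trim_wav`, the file names and the timestamps are all made up for illustration, and it assumes the song has been exported or converted to an uncompressed WAV file.

```python
import wave


def trim_wav(src_path, dst_path, start_s, duration_s):
    """Copy duration_s seconds of audio, starting at start_s, into a new WAV file.

    Hypothetical helper for illustration. Returns the number of frames written.
    """
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        rate = src.getframerate()
        # Convert the requested times into frame positions.
        start_frame = min(int(start_s * rate), src.getnframes())
        n_frames = int(duration_s * rate)
        src.setpos(start_frame)
        # readframes returns fewer frames if the file ends early.
        frames = src.readframes(n_frames)

    with wave.open(dst_path, "wb") as dst:
        # Reuse the source format; the frame count in the header is
        # corrected automatically when the file is closed.
        dst.setparams(params)
        dst.writeframes(frames)

    return len(frames) // (params.sampwidth * params.nchannels)


# Example call (file names and timings are assumptions):
# trim_wav("song.wav", "chorus.wav", start_s=42.0, duration_s=4.0)
```

The trimmed file can then be uploaded to Lip Sync like any other audio clip.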

Overall I think Pika Labs has done an exceptional job with its lip sync tool. It creates captivating lip movement but does fall short on other facial animation if the video hasn't already been animated.

There are other services out there, including Synclabs, that will create longer videos, but the fact this is built into a generative video tool and integrates ElevenLabs gives it an edge.

All Pika Labs needs now is a timeline tool to allow for video editing directly within the platform, as well as integration with a sound effects generator like AudioCraft, and it will be on its way to being a full production-level tool.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?