Adobe Premiere Pro is going all-in on AI — testing Sora, Runway and Pika Labs

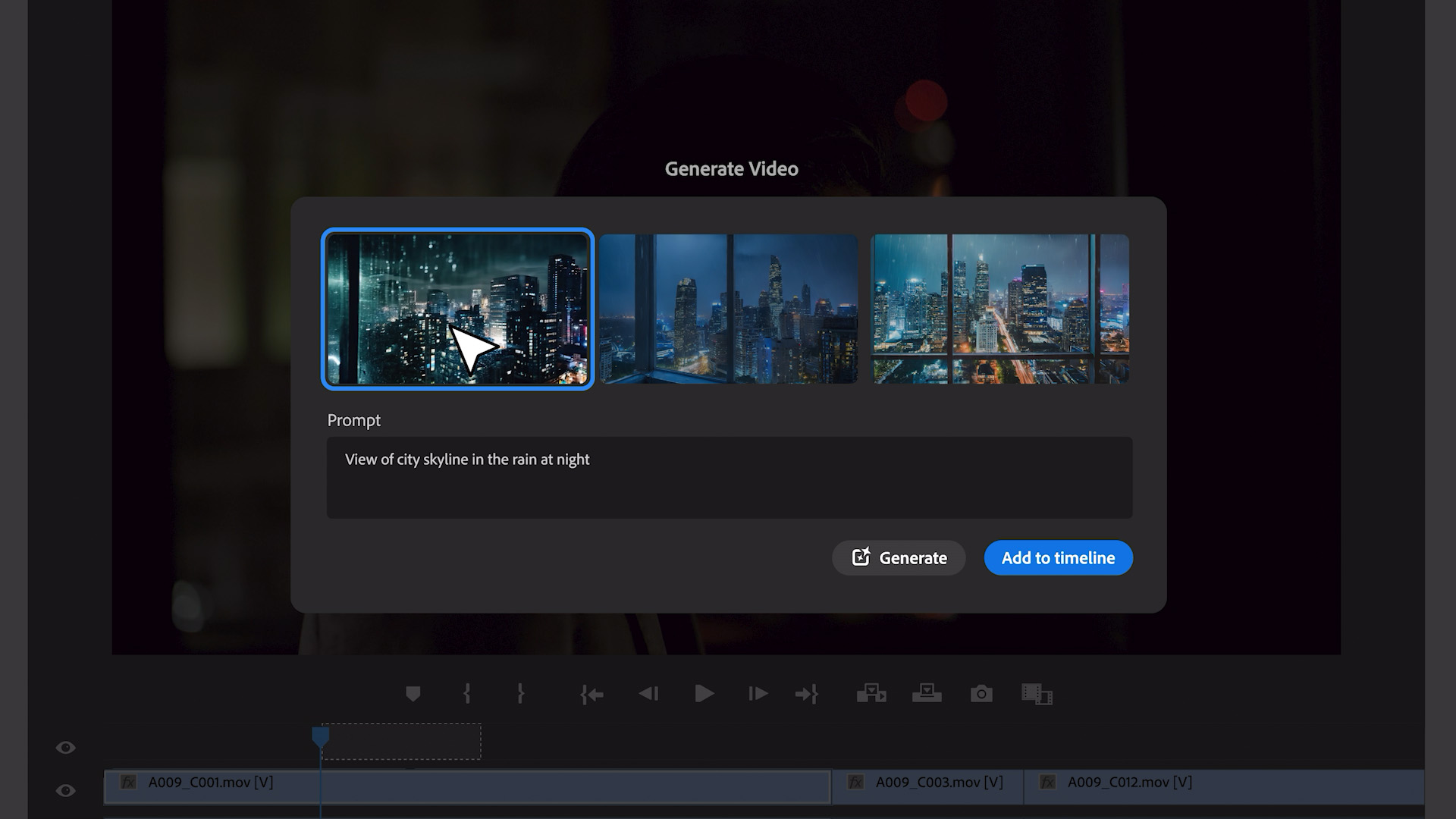

Make videos from text in Premiere Pro

Adobe is working on some impressive new AI video features for Premiere Pro, including integration with third-party services like OpenAI’s Sora, Runway and Pika Labs.

The update is currently available only internally at Adobe for testing, with no public release timeline beyond "this year". It will include the ability to use AI to extend a clip, as well as to add or remove objects within a sequence in seconds.

While Adobe is experimenting with third parties, the company says the core functionality will be delivered through a new Firefly Video generative AI model trained on licensed content.

“Adobe is reimagining every step of video creation and production workflow to give creators new power and flexibility to realize their vision,” said Ashley Still, SVP of creative products at Adobe.

Adobe says any AI-generated video, or video that includes AI-generated elements, will be labelled as such both in Premiere Pro and in its metadata.

Why is Adobe bringing Sora into Premiere Pro?

Since revealing Sora earlier this year, OpenAI has demonstrated that AI video can create not just a short three-second clip but a fully produced, multi-shot video from a text prompt. Runway and others are catching up fast.

Synthetic video models such as Sora, Runway and Pika Labs have been controversial among some creatives, with concerns over their impact on jobs, the provenance of their training data and the quality of their output.



However, this criticism hasn't been universal, with many seeing generative AI as a way to enhance existing video production rather than replace it, even putting advanced graphics and visual effects in the hands of smaller creators in a way that was never previously possible.

Adobe wants to capitalize on that potential. The fact that it is initially focusing on improving workflows rather than just generating video, as it did with generative fill in Photoshop, is testament to that, and a sensible, if cautious, approach.

Still said: “By bringing generative AI innovations deep into core Premiere Pro workflows, we are solving real pain points that video editors experience every day, while giving them more space to focus on their craft.”

What AI features are coming to Premiere Pro?

Aside from previously announced automatic labelling and audio improvement features — such as cleaning up background noise — Adobe confirmed it is bringing generative expansion and in-video editing to Premiere Pro.

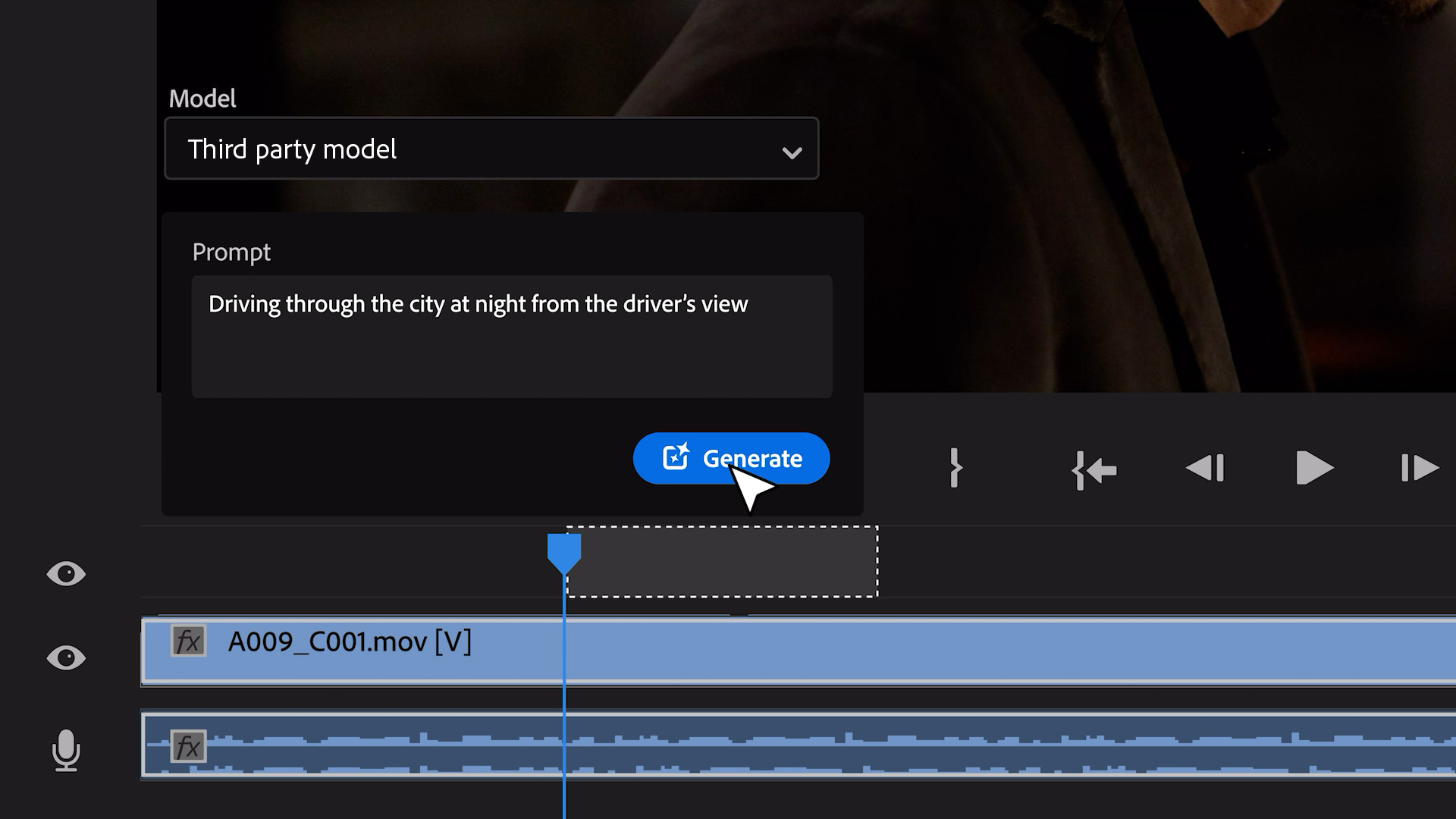

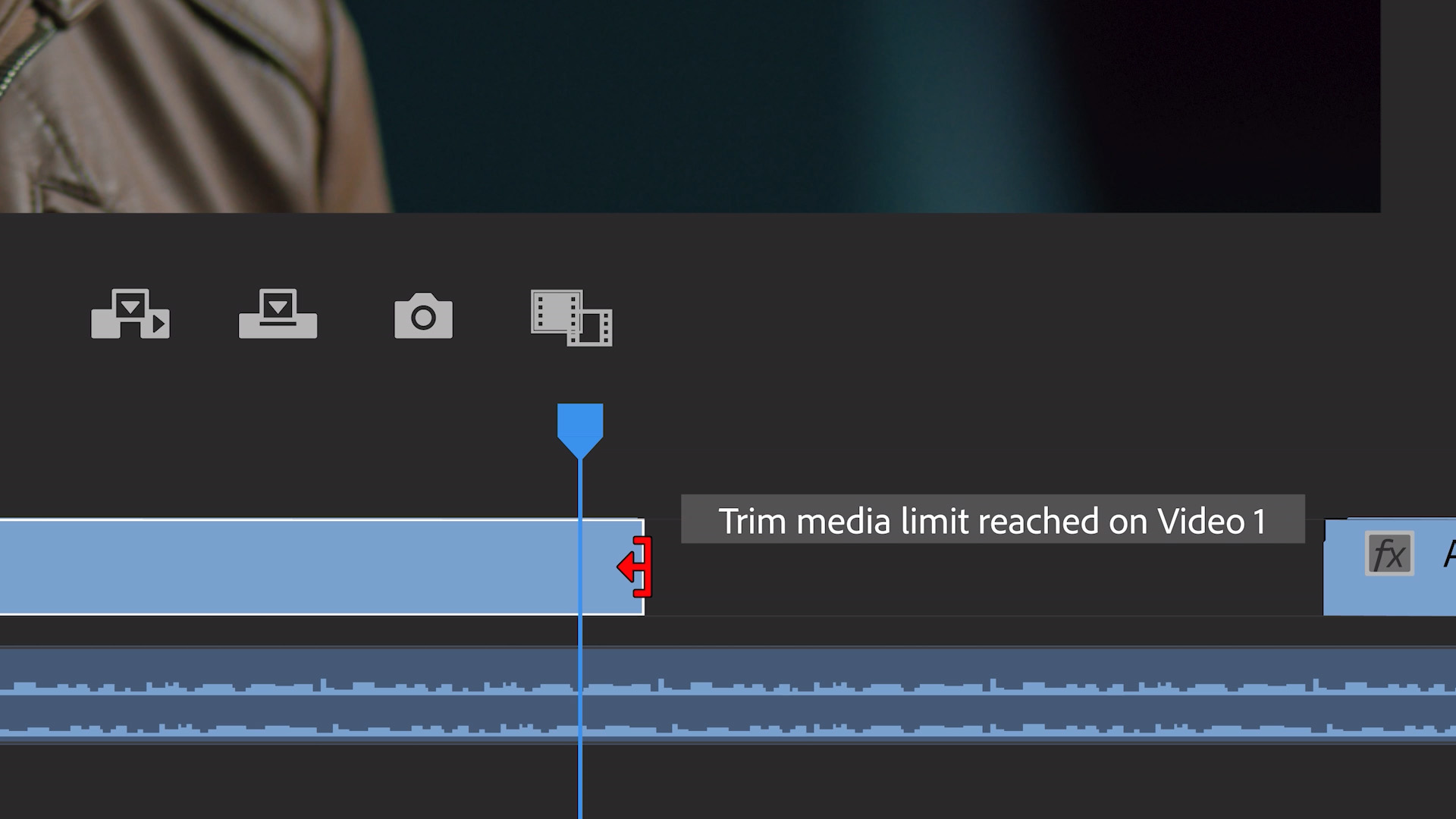

By default, generative expansion will use Adobe's Firefly AI video model, which is due to launch later this year. It takes the start of the clip you want to extend as source material and extends it by a few frames or seconds.

You drag the clip out as far as you want to extend it, for example to match a piece of audio, and the AI does the rest, generating pixels to match what came before.

This is similar to generative fill in Photoshop where you can expand a canvas and have Firefly predict what should be in that space, generating an image to match.

What are the new AI video features for?

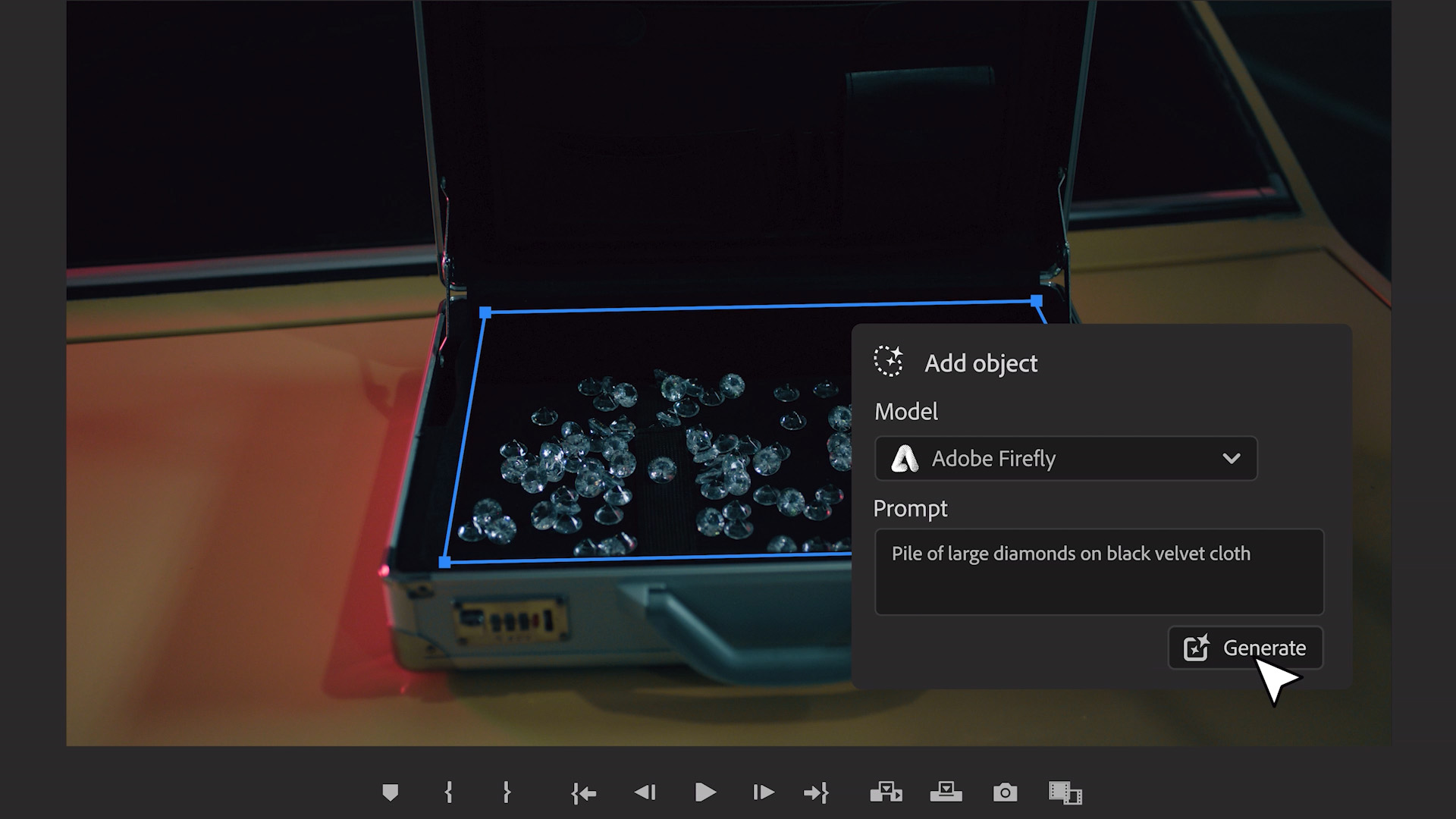

The other generative AI video feature is all about fixing what is already there. During a brief demo, I was shown a box being removed from a scene and staying consistently gone across every frame of the clip, using nothing but a mouse click.

Another demo showed an object being added to a scene from nothing but a text prompt. These tools could be invaluable for editors looking to blur a face or remove an object that breaks consistency across multiple shots, for example an out-of-place pot or car.

AI models will also be able to generate B-roll within Premiere Pro, according to Adobe. Using Sora, Runway or Pika Labs, video editors may be able to create extra footage for a project without having to go out and film it.

In a video Adobe shared to show the potential of these new capabilities, it used Runway and Sora to create B-roll, its own model to remove an object, and Pika Labs to extend a clip.

While none of this is available to the public, every example was produced using an internal version of Premiere Pro with real integration of the AI models.


Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?